User Guide

Introduction

Overview

Welcome to the Camunda BPM user guide! Camunda BPM is a Java-based framework for process automation. This document contains information about the features provided by the Camunda BPM platform.

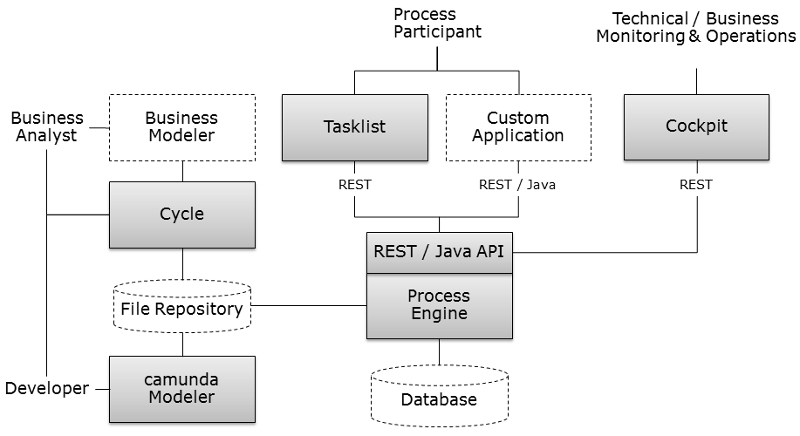

Camunda BPM is built around the process engine component. The following illustration shows the most important components of Camunda BPM along with some typical user roles.

Process Engine & Infrastructure

- Process Engine: The process engine is a Java library responsible for executing BPMN 2.0 processes and workflows. It has a lightweight POJO core and uses a relational database for persistence. Object-relational mapping is provided by the MyBatis mapping framework.

- Spring Framework Integration

- CDI / Java EE Integration

- Runtime Container Integration (Integration with application server infrastructure.)

Web Applications

- REST API: The REST API allows you to use the process engine from a remote application or a JavaScript application. (Note: The documentation of the REST API is maintained in a separate document.)

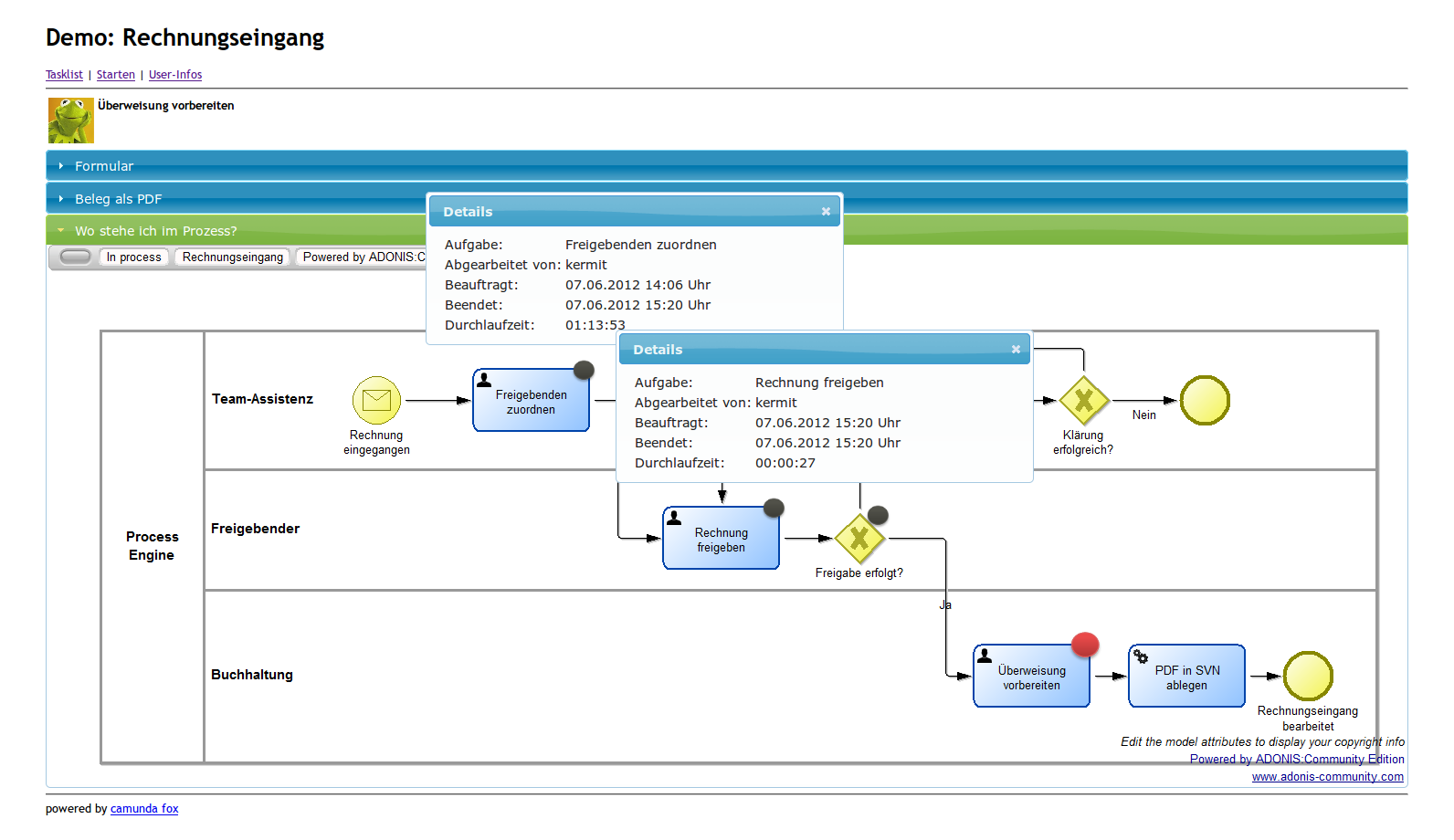

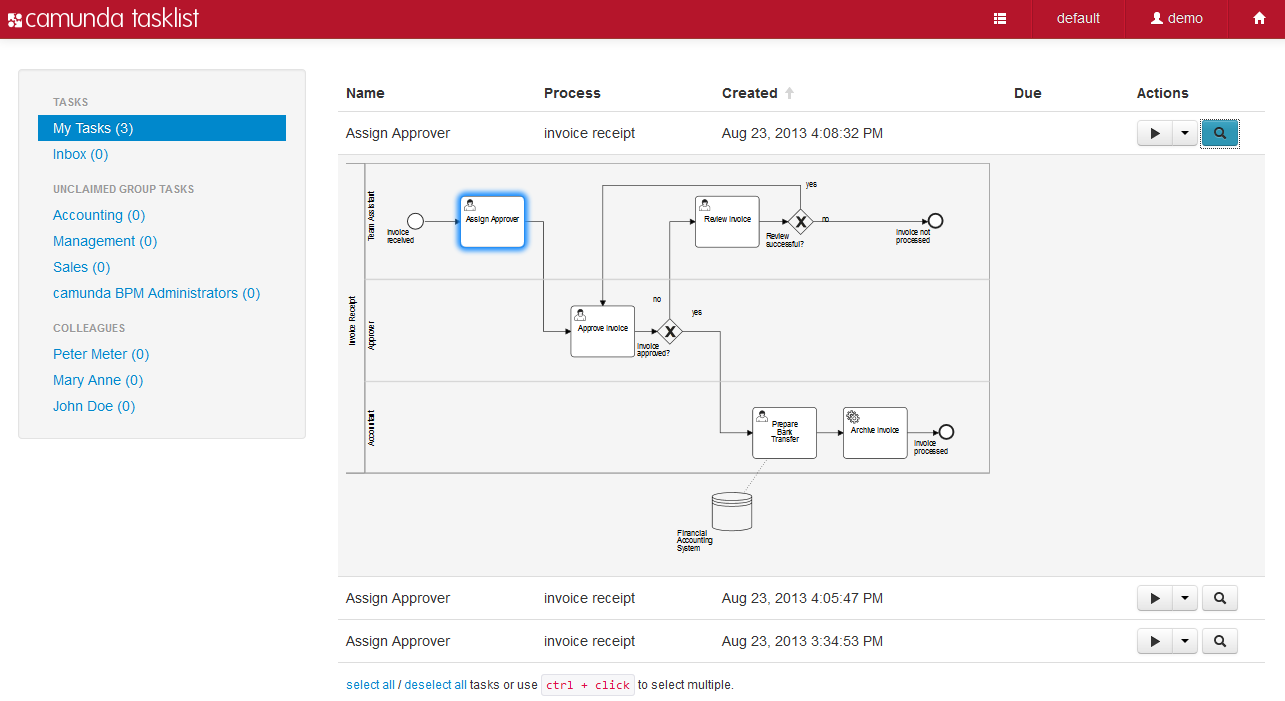

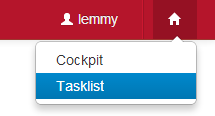

- Camunda Tasklist: A web application for human workflow management and user tasks. It allows process participants to inspect their workflow tasks and navigate to task forms in order to work on the tasks and provide data input.

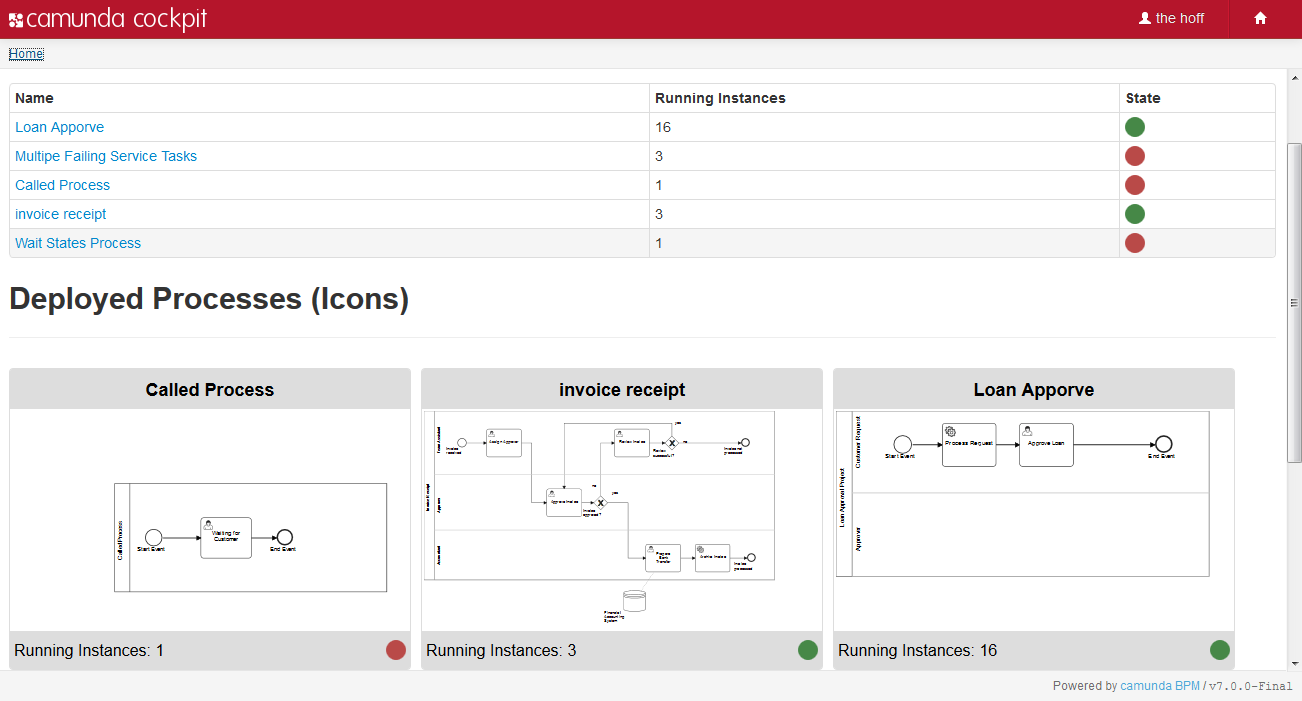

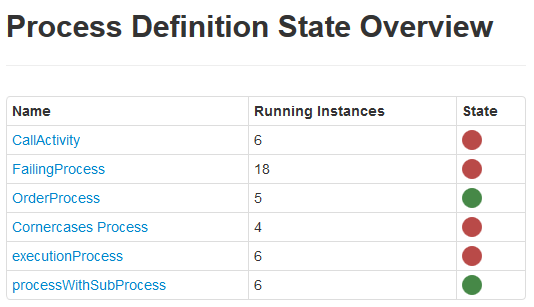

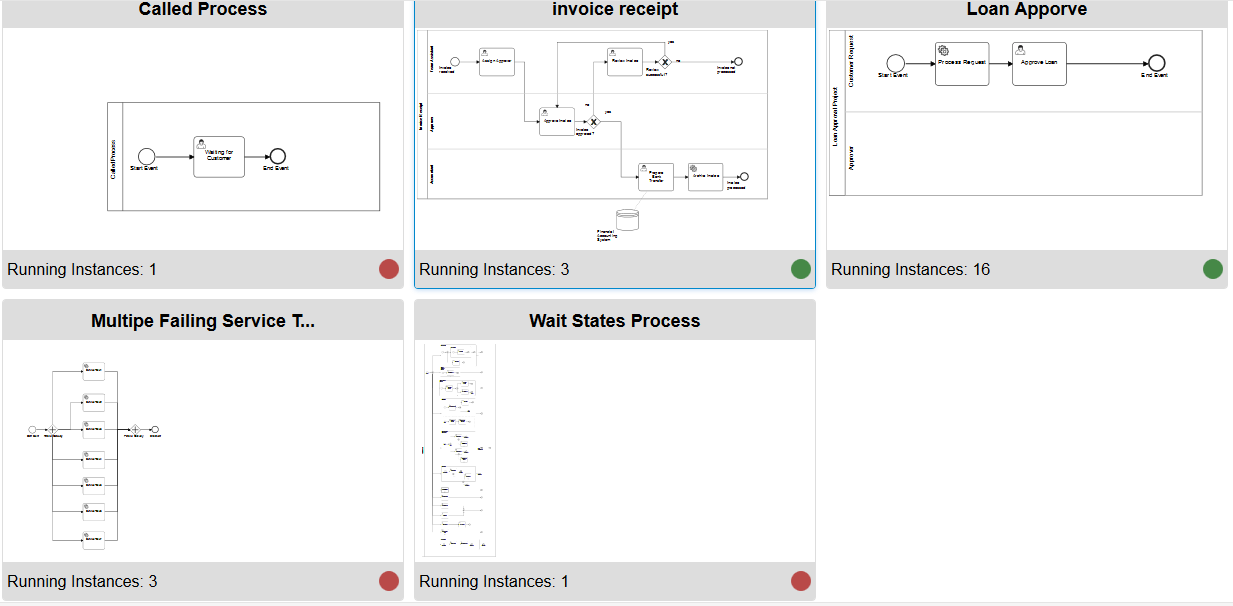

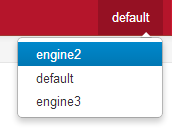

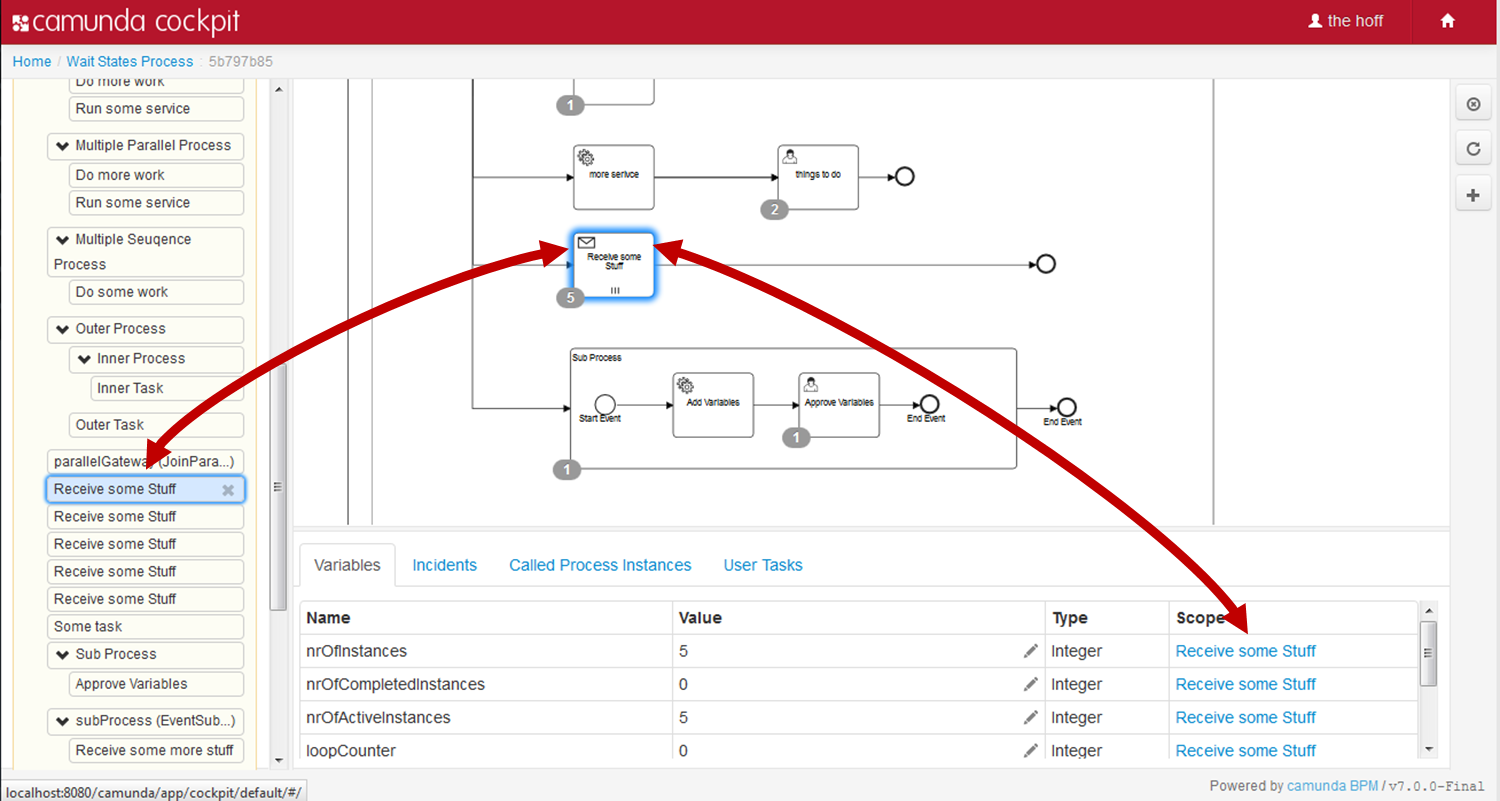

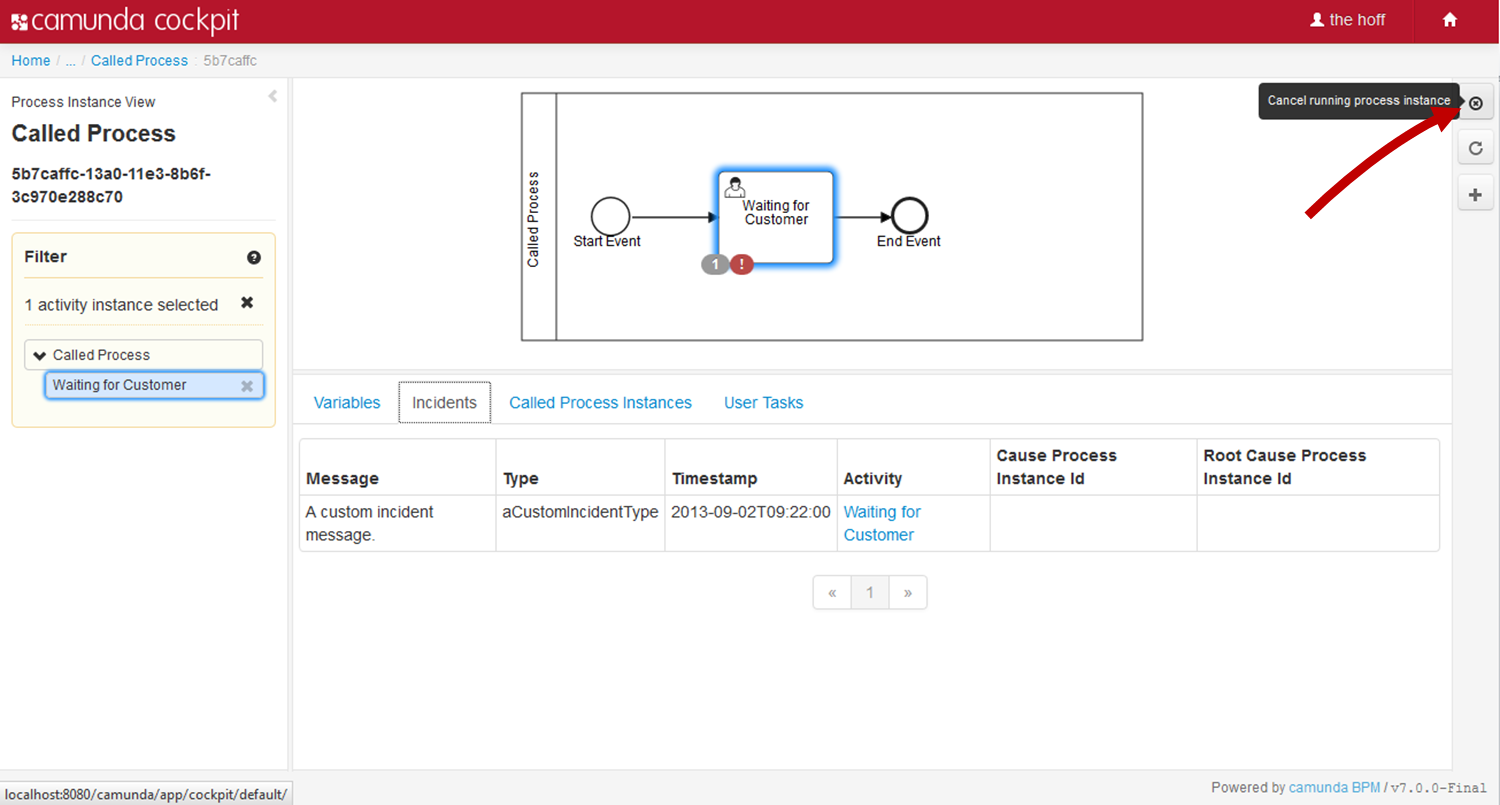

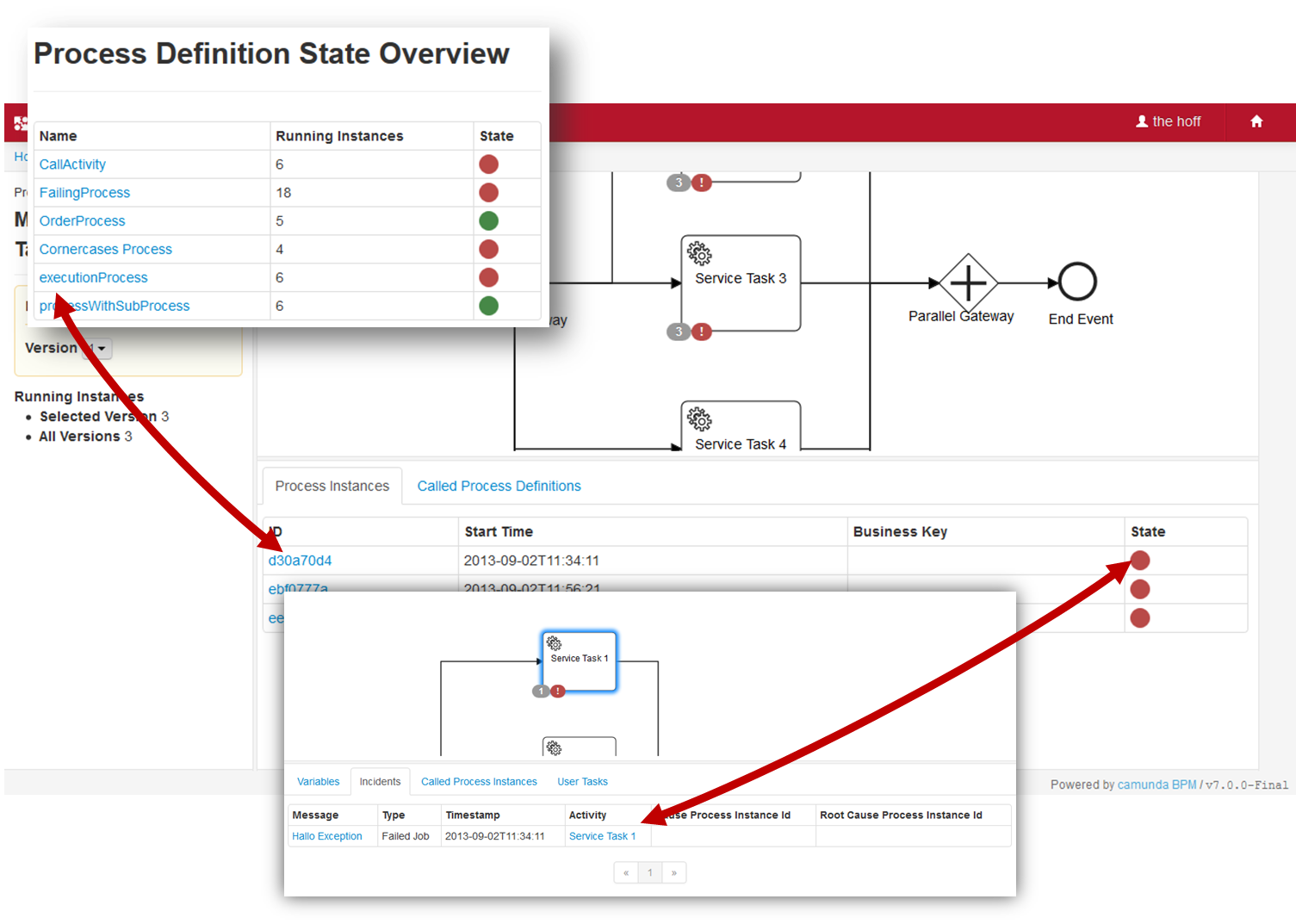

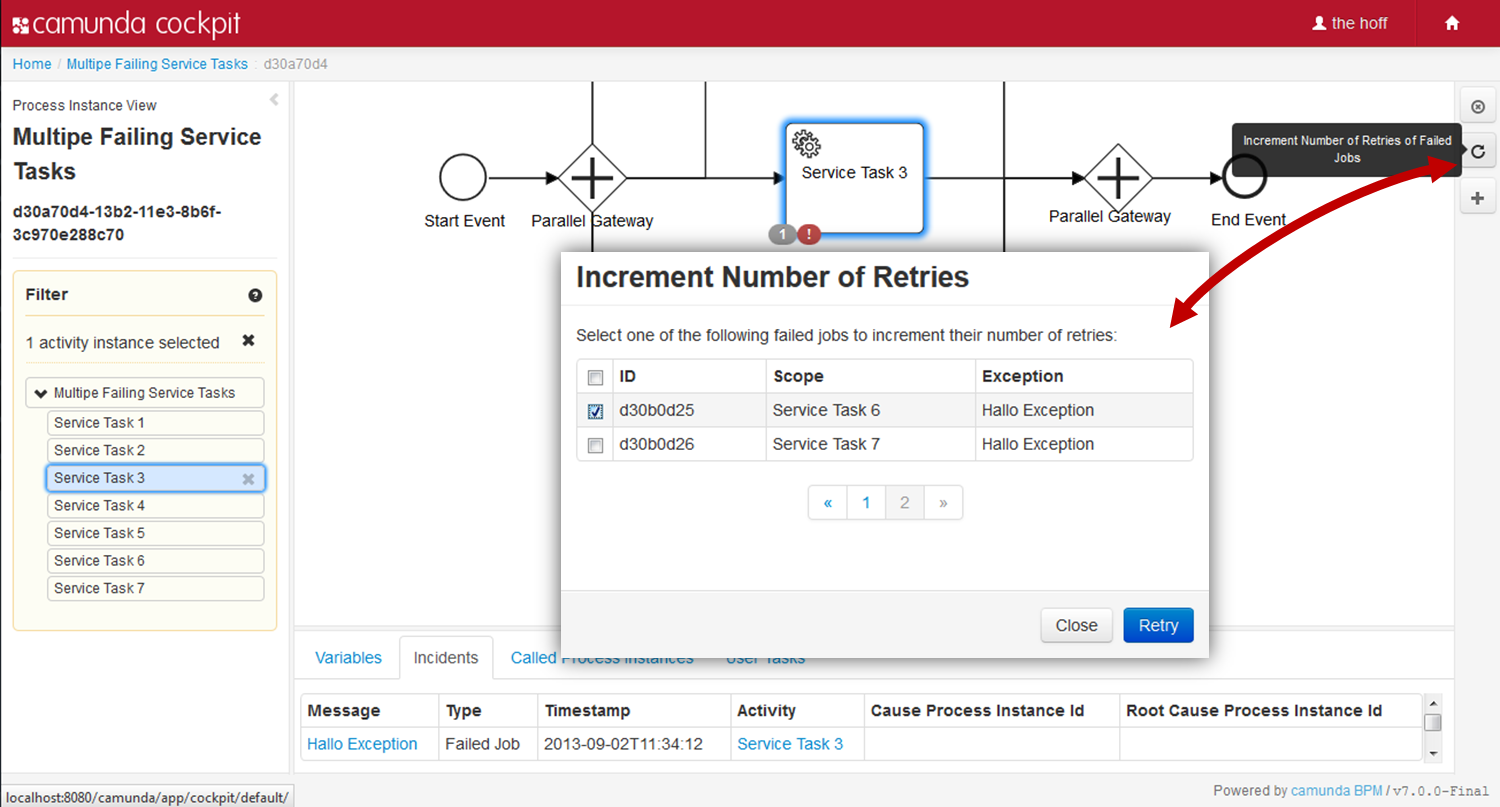

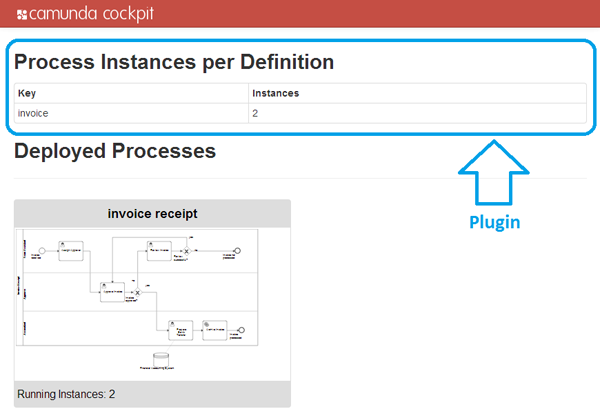

- Camunda Cockpit: A web application for process monitoring and operations that allows you to search for process instances, inspect their state and repair broken instances.

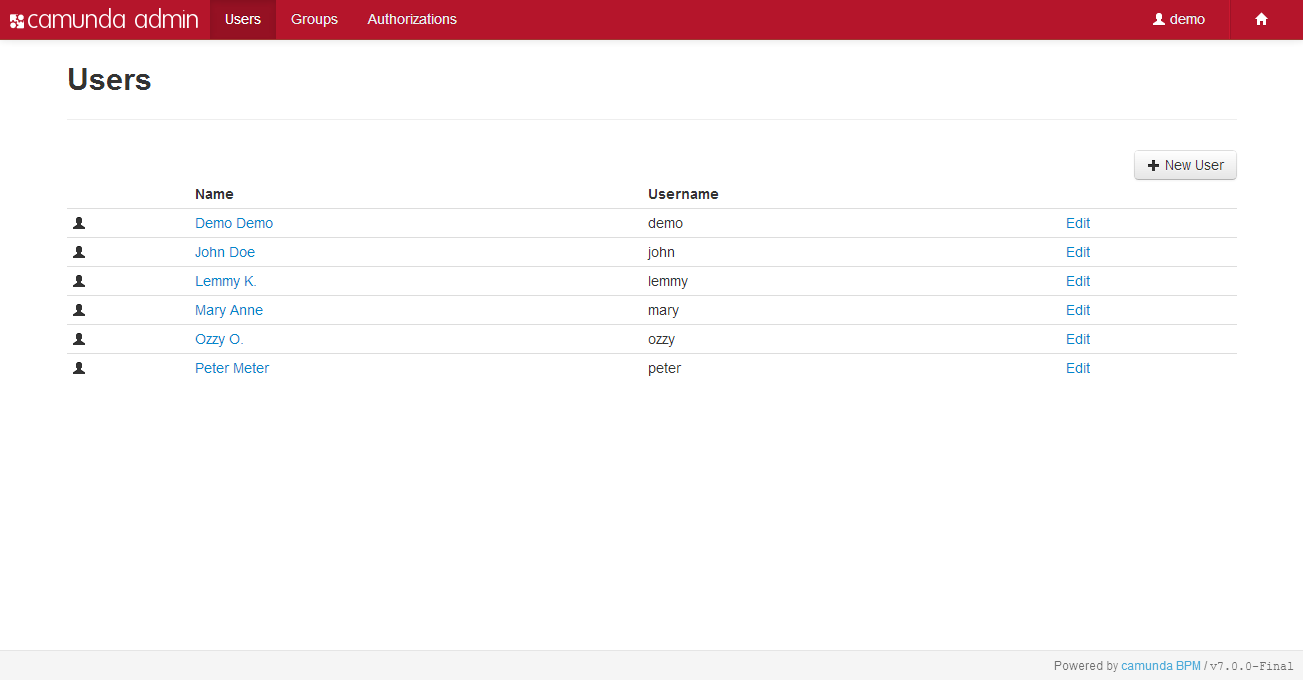

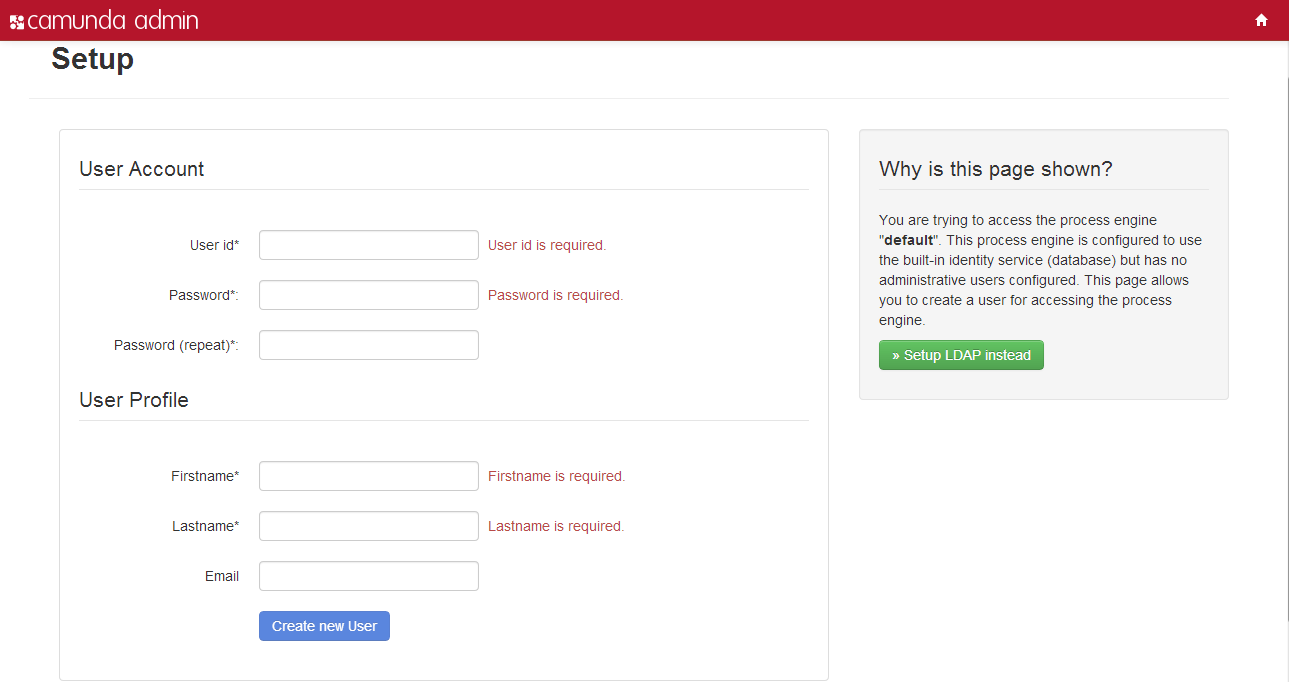

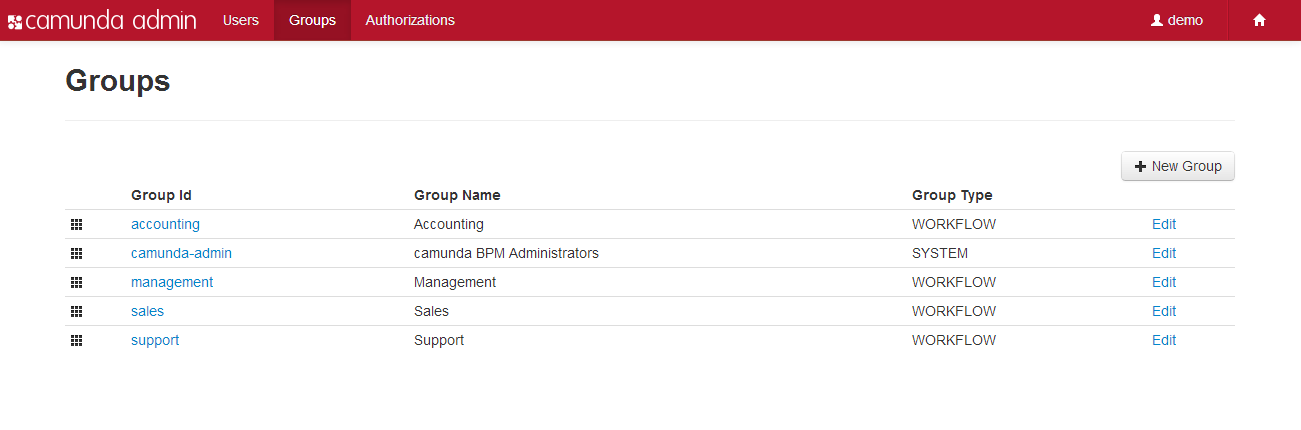

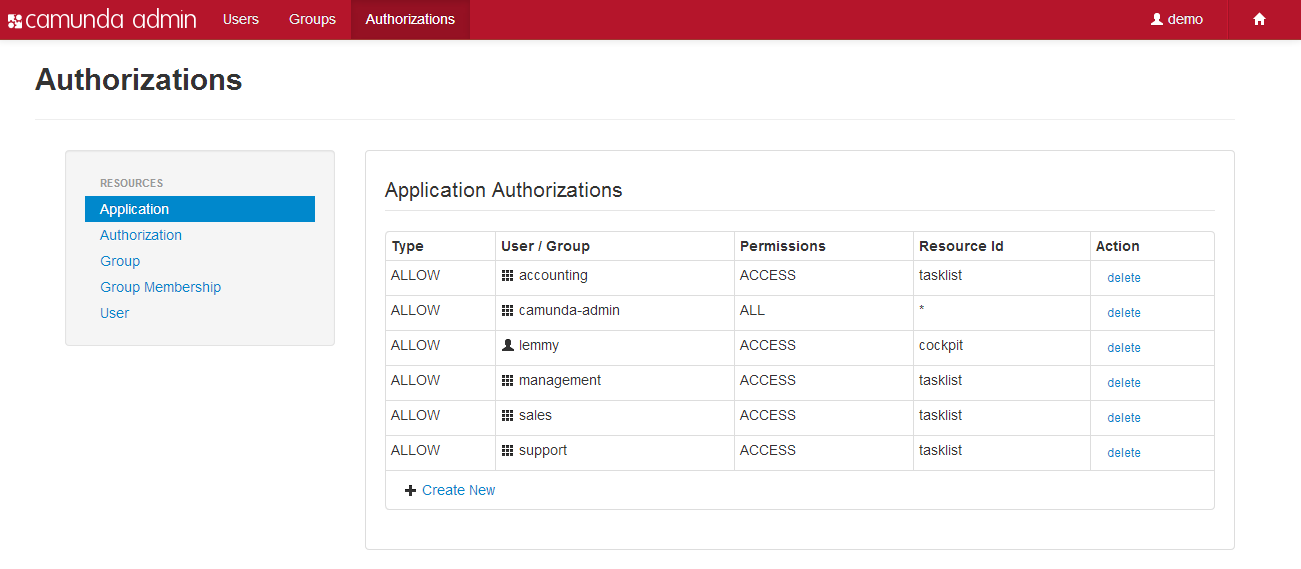

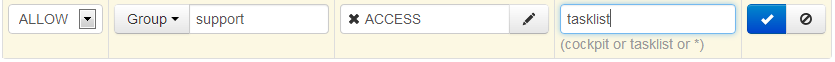

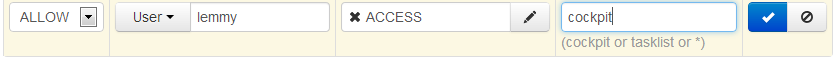

- Camunda Admin: A web application for user management that allows you to manage users, groups and authorizations.

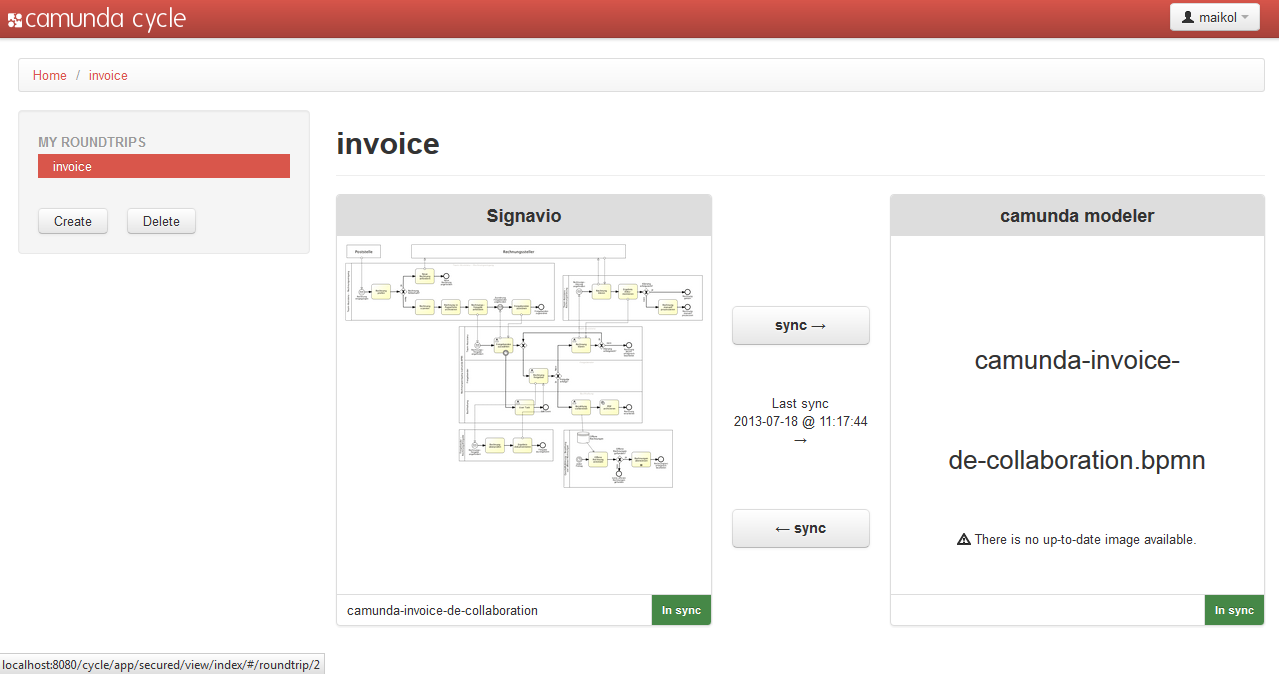

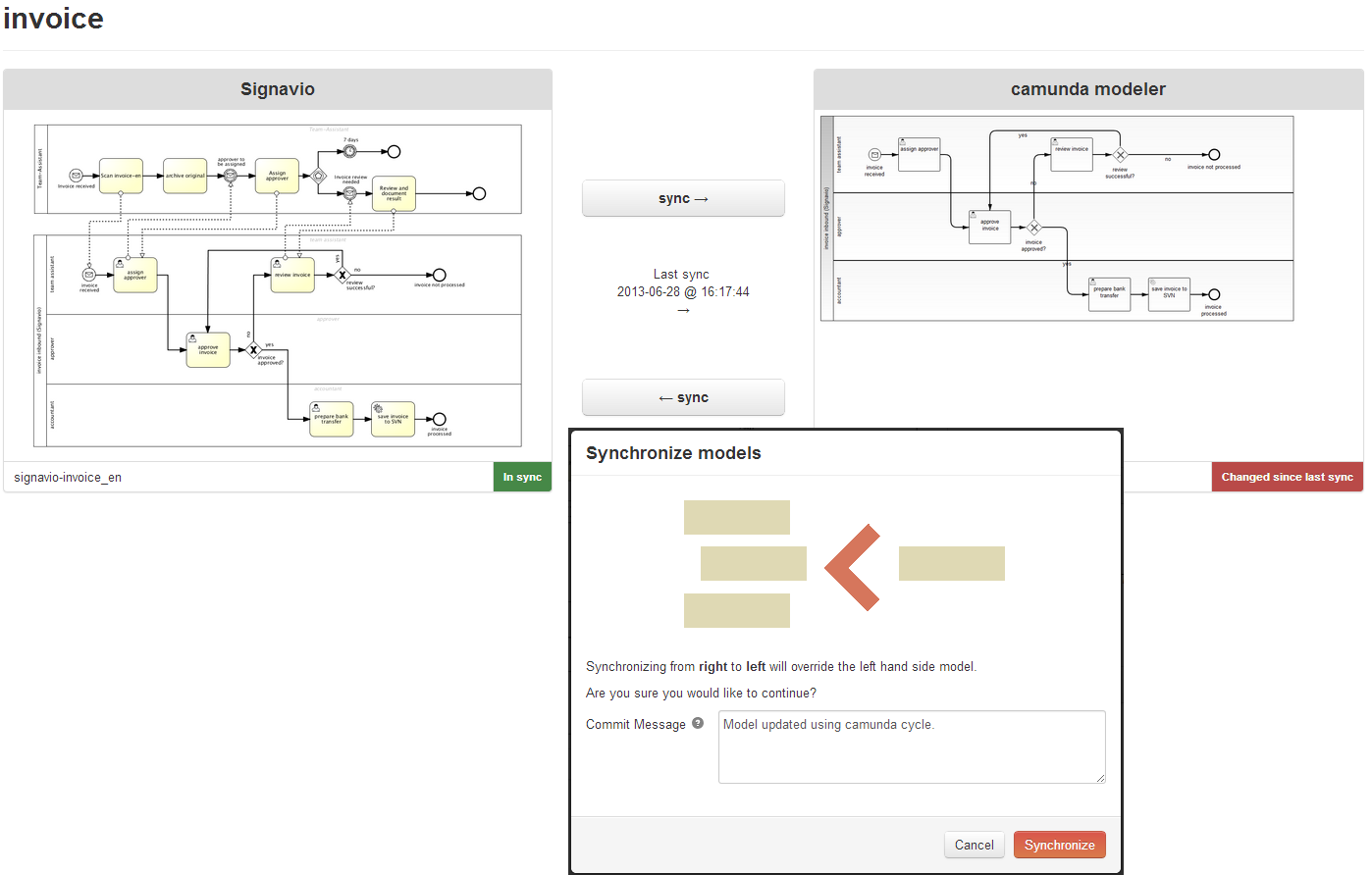

- Camunda Cycle: A web application for synchronizing BPMN 2.0 process models between different modeling tools and modelers.

Additional Tools

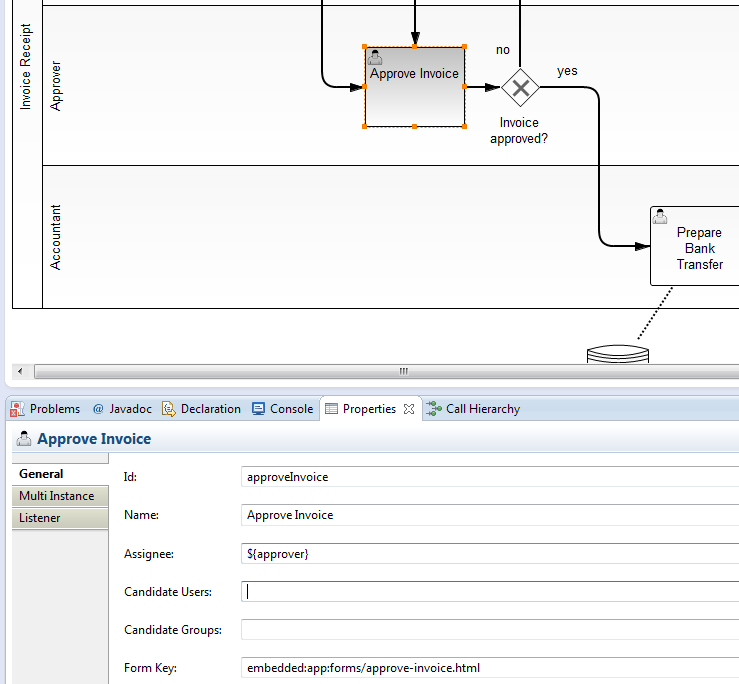

- Camunda Modeler: Eclipse plugin for process modeling.

- camunda-bpmn.js: JavaScript framework for parsing, rendering and executing BPMN 2.0 from an XML source.

Download

Download the Runtime

Camunda is a flexible framework which can be used in different contexts. See Architecture Overview for more details. Based on how you want to use camunda, you can choose a different distribution.

Community vs. Enterprise Edition

Camunda provides separate runtime downloads for community users and enterprise subscription customers:

Full Distribution

Download the full distribution if you want to use a shared process engine or if you want to get to know camunda quickly, without any additional setup or installation steps required*.

The full distribution bundles

- Process Engine configured as shared process engine,

- Runtime Web Applications (Tasklist, Cockpit, Admin),

- REST API,

- Container / Application Server itself*.

* Note that if you download the full distribution for an open source application server/container, the container itself is included. For example, if you download the Tomcat distribution, Tomcat itself is included and the camunda binaries (process engine and web applications) are pre-installed into the container. This is not true for the Oracle WebLogic and IBM WebSphere downloads. These downloads do not include the application servers themselves.

See Installation Guide for additional details.

Standalone Web Application Distribution

Download the standalone web application distribution if you want to use the Cockpit, Tasklist and Admin applications as a self-contained WAR file with an embedded process engine.

The standalone web application distribution bundles

- Process engine configured as embedded process engine,

- Runtime Web Applications (Tasklist, Cockpit, Admin),

- REST API.

The standalone web application can be deployed to any of the supported application servers.

The process engine configuration is based on the Spring Framework. If you want to change the database configuration, edit the WEB-INF/applicationContext.xml file inside the WAR file.

See Installation Guide for additional details.

Download Camunda Modeler

Camunda Modeler is an Eclipse-based modeling tool for BPMN 2.0. Camunda Modeler can be downloaded from the community download page.

Getting Started

The getting started tutorials can be found at http://docs.camunda.org/guides/getting-started-guides/.

Architecture Overview

camunda BPM is a Java-based framework. The main components are written in Java and we have a general focus on providing Java developers with the tools they need for designing, implementing and running business processes and workflows on the JVM. Nevertheless, we also want to make the process engine technology available to non-Java developers. This is why camunda BPM also provides a REST API which allows you to build applications connecting to a remote process engine.

camunda BPM can be used either as a standalone process engine server or embedded inside custom Java applications. The embeddability requirement is at the heart of many architecture decisions within camunda BPM. For instance, we work hard to make the process engine component a lightweight component with as few dependencies on third-party libraries as possible. Furthermore, embeddability motivates programming model choices such as the ability of the process engine to participate in Spring-managed or JTA transactions, and the threading model.

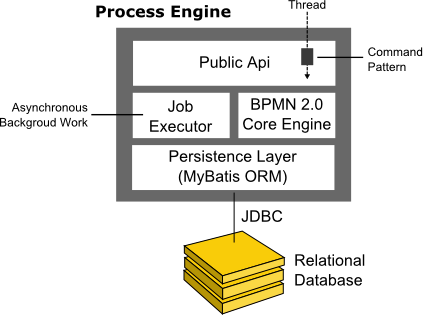

Process Engine Architecture

- Process Engine Public API: Service-oriented API allowing Java applications to interact with the process engine. The different responsibilities of the process engine (i.e. Process Repository, Runtime Process Interaction, Task Management, ...) are separated into individual services. The public API features a command-style access pattern: threads entering the process engine are routed through a Command Interceptor, which is used for setting up thread context such as transactions.

- BPMN 2.0 Core Engine: this is the core of the process engine. It features a lightweight execution engine for graph structures (PVM - Process Virtual Machine), a BPMN 2.0 parser which transforms BPMN 2.0 XML files into Java objects, and a set of BPMN behavior implementations (providing the implementation for BPMN 2.0 constructs such as Gateways or Service Tasks).

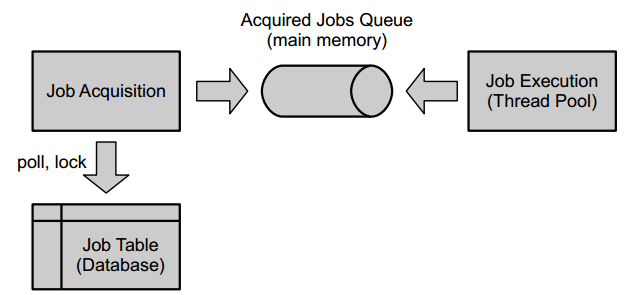

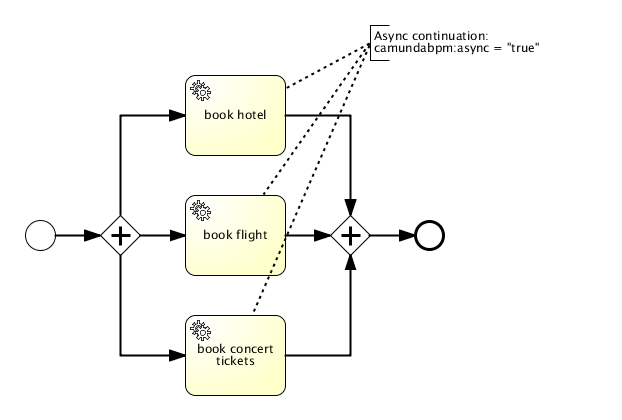

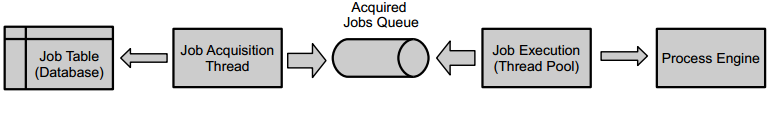

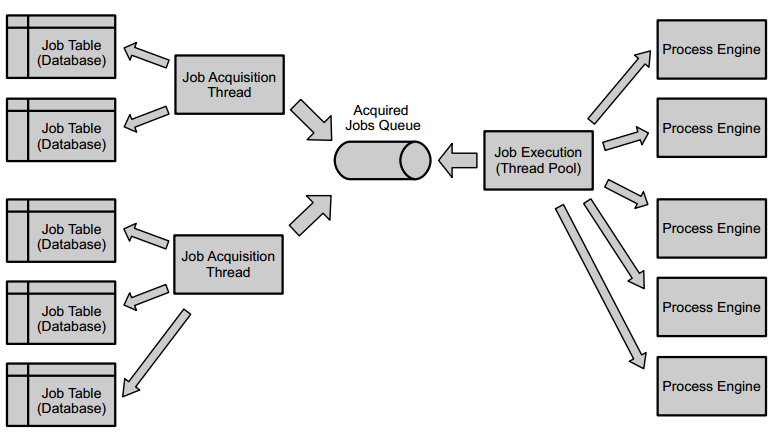

- Job Executor: the Job Executor is responsible for processing asynchronous background work such as Timers or asynchronous continuations in a process.

- The Persistence Layer: the process engine features a persistence layer responsible for persisting process instance state to a relational database. We use the MyBatis mapping engine for object relational mapping.
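The command-style access pattern mentioned in the list above can be illustrated with a small, self-contained sketch. This is not the engine's actual implementation: the names `Command`, `CommandInterceptor`, `TransactionInterceptor` and `CommandInterceptorSketch` are hypothetical, and the "transaction" is only simulated by log entries.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of a command-style API; these are not the engine's real classes.
class CommandInterceptorSketch {

    /** A unit of work submitted by a service method. */
    interface Command<T> {
        T execute(List<String> log);
    }

    /** Wraps command execution to set up and tear down thread context. */
    interface CommandInterceptor {
        <T> T execute(Command<T> command, List<String> log);
    }

    /** Simulates opening and committing a transaction around the command. */
    static class TransactionInterceptor implements CommandInterceptor {
        @Override
        public <T> T execute(Command<T> command, List<String> log) {
            log.add("begin transaction");
            try {
                T result = command.execute(log);
                log.add("commit");
                return result;
            } catch (RuntimeException e) {
                log.add("rollback");
                throw e;
            }
        }
    }

    /** Routes a sample command through the interceptor and returns the recorded trace. */
    static String trace() {
        List<String> log = new ArrayList<>();
        CommandInterceptor interceptor = new TransactionInterceptor();
        Command<String> startInstance = l -> {
            l.add("start process instance");
            return "instance-1";
        };
        String result = interceptor.execute(startInstance, log);
        log.add("result=" + result);
        return String.join(" -> ", log);
    }

    public static void main(String[] args) {
        System.out.println(trace());
    }
}
```

The point of the pattern is that the calling code never opens or commits a transaction itself; the interceptor surrounds every command with the same context handling.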

Required third-party libraries

See section on third-party libraries.

camunda BPM platform architecture

camunda BPM platform is a flexible framework which can be deployed in different scenarios. This section provides an overview of the most common deployment scenarios.

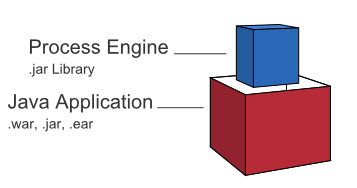

Embedded Process Engine

In this case the process engine is added as an application library to a custom application. This way the process engine can easily be started and stopped with the application lifecycle. It is possible to run multiple embedded process engines on top of a shared database.

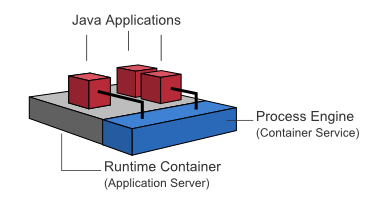

Shared, container-managed Process Engine

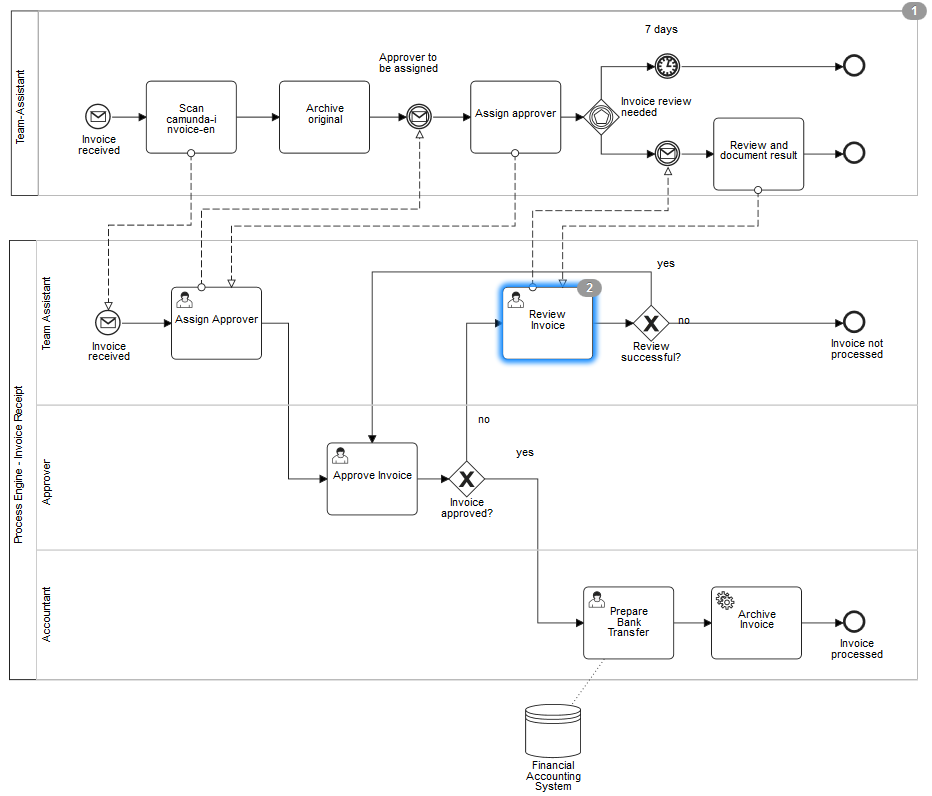

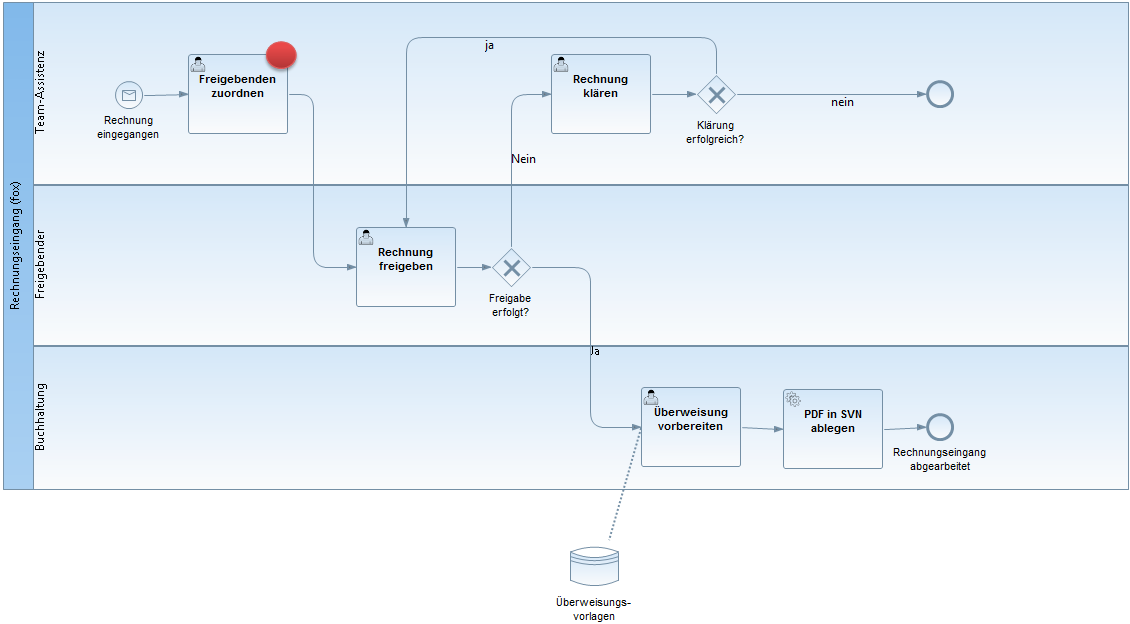

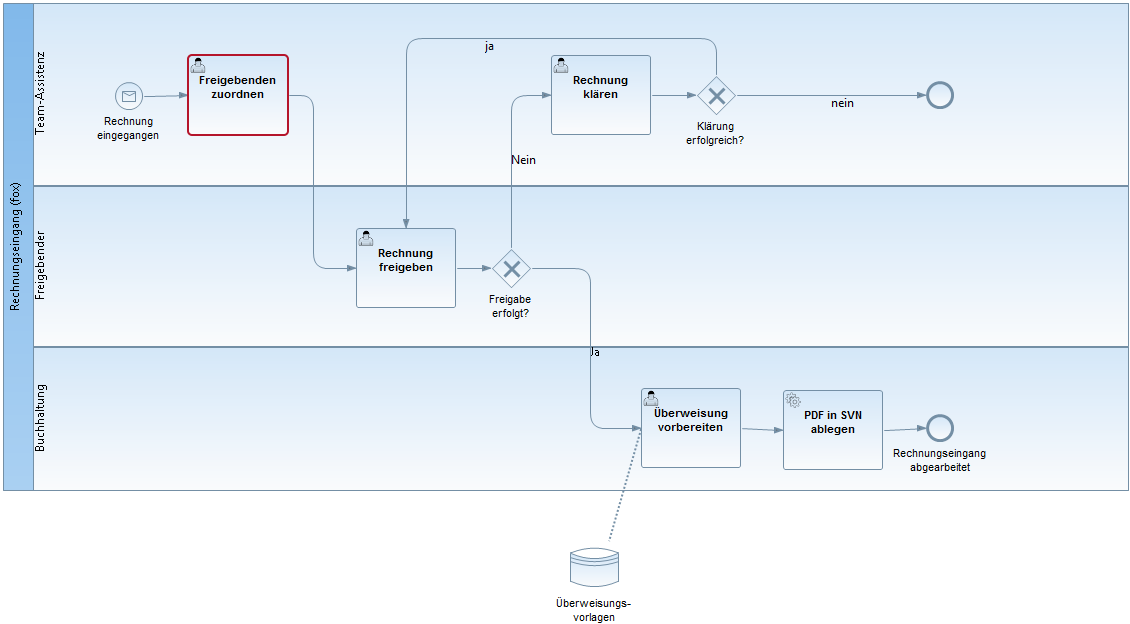

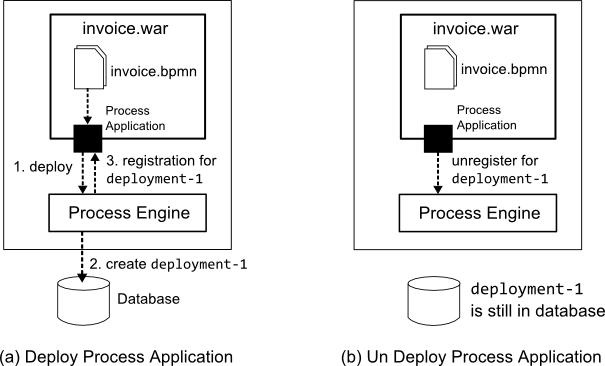

In this case the process engine is started inside the runtime container (Servlet Container, Application Server, ...). The process engine is provided as a container service and can be shared by all applications deployed inside the container. The concept can be compared to a JMS Message Queue which is provided by the runtime and can be used by all applications. There is a one-to-one mapping between process deployments and applications: the process engine keeps track of the process definitions deployed by an application and delegates execution to the application in question.

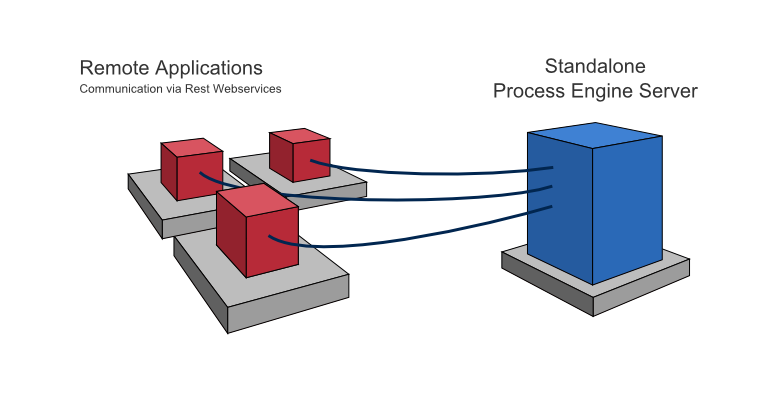

Standalone (Remote) Process Engine Server

In this case the process engine is provided as a network service. Different applications running on the network can interact with the process engine through a remote communication channel. The easiest way to make the process engine accessible remotely is to use the built-in REST API. Other communication channels such as SOAP web services or JMS are possible but need to be implemented by users.

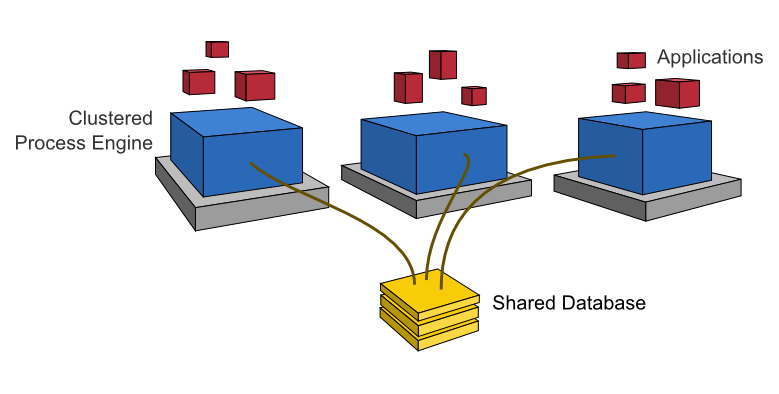

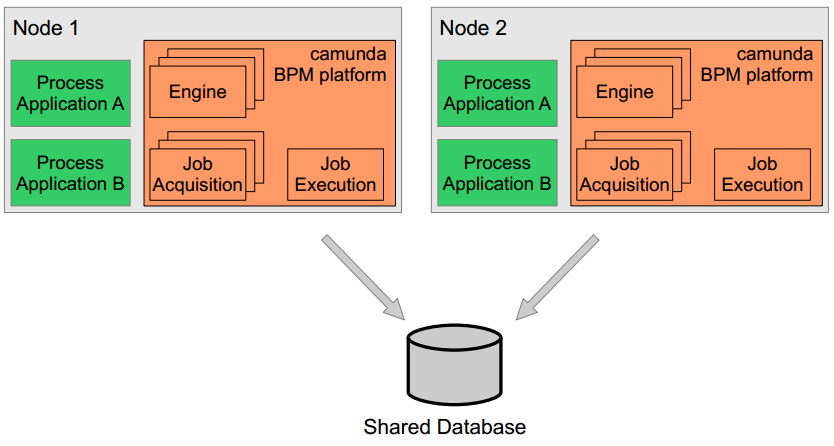

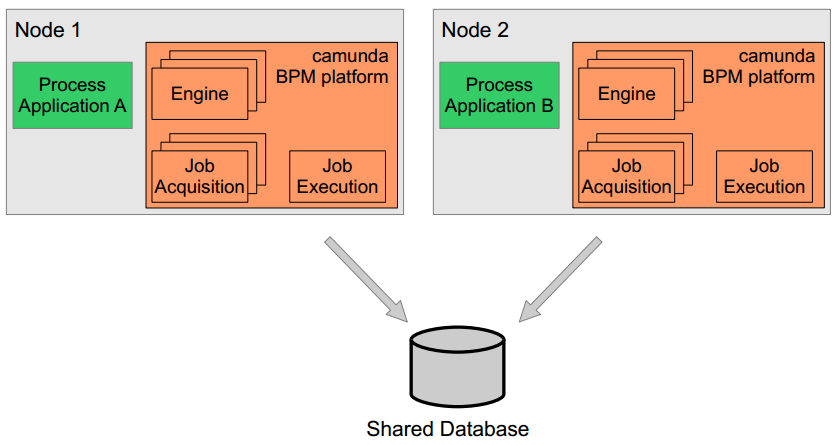

Clustering Model

In order to provide scale-up or fail-over capabilities, the process engine can be distributed to different nodes in a cluster. Each process engine instance must then connect to a shared database.

The individual process engine instances do not maintain session state across transactions. Whenever the process engine runs a transaction, the complete state is flushed out to the shared database. This makes it possible to route subsequent requests which do work in the same process instance to different cluster nodes. This model is very simple and easy to understand and imposes limited restrictions when it comes to deploying a cluster installation. As far as the process engine is concerned there is also no difference between setups for scale-up and setups for fail-over (as the process engine keeps no session state between transactions).

The process engine job executor is also clustered and runs on each node. This way, there is no single point of failure as far as the process engine is concerned. The job executor can run in both homogeneous and heterogeneous clusters.
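The following minimal sketch illustrates why this model allows consecutive requests for the same process instance to hit different nodes. It is a simplification under stated assumptions: the class names are hypothetical, the shared database is stood in for by a plain map, and a process instance's state is reduced to a step counter.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: two stateless nodes sharing one "database" (here a map).
class StatelessClusterSketch {

    /** A node keeps no instance state of its own; everything lives in the shared store. */
    static class EngineNode {
        private final Map<String, Integer> sharedDatabase;

        EngineNode(Map<String, Integer> sharedDatabase) {
            this.sharedDatabase = sharedDatabase;
        }

        /** Loads the current step, advances it and flushes it back, like one transaction. */
        int advance(String processInstanceId) {
            int step = sharedDatabase.getOrDefault(processInstanceId, 0);
            int next = step + 1;
            sharedDatabase.put(processInstanceId, next);
            return next;
        }
    }

    /** Routes three consecutive requests for one instance to alternating nodes. */
    static int runAcrossNodes() {
        Map<String, Integer> database = new HashMap<>();
        EngineNode nodeA = new EngineNode(database);
        EngineNode nodeB = new EngineNode(database);
        nodeA.advance("order-1");
        nodeB.advance("order-1");
        return nodeA.advance("order-1");
    }

    public static void main(String[] args) {
        System.out.println("step after three requests: " + runAcrossNodes());
    }
}
```

Because each call reads and writes the complete state, it does not matter which node served the previous request.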

Multi-Tenancy Model

To serve multiple, independent parties with one Camunda installation, the process engine supports multi-tenancy. The following multi-tenancy models are supported:

- Table-level data separation by using different database schemas or databases,

- Row-level data separation by using a tenant marker.

Users should choose the model which fits their data separation needs. Camunda's APIs provide access to processes and related data specific to each tenant. More details can be found in the multi-tenancy section.
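As a rough illustration of row-level separation, the sketch below filters rows of a single shared table by a tenant marker. It is a conceptual example only: the `Row` type, the field names and the tenant ids are hypothetical and do not reflect the engine's schema or API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of row-level separation with a tenant marker column.
class TenantMarkerSketch {

    /** One row of a shared table; tenantId is the marker that separates tenants. */
    static class Row {
        final String tenantId;
        final String processDefinitionKey;

        Row(String tenantId, String processDefinitionKey) {
            this.tenantId = tenantId;
            this.processDefinitionKey = processDefinitionKey;
        }
    }

    /** Returns only the definitions whose marker matches the requested tenant. */
    static List<String> definitionsFor(String tenantId, List<Row> table) {
        List<String> result = new ArrayList<>();
        for (Row row : table) {
            if (row.tenantId.equals(tenantId)) {
                result.add(row.processDefinitionKey);
            }
        }
        return result;
    }

    /** Builds a small shared table and queries it for one tenant. */
    static String demo() {
        List<Row> table = Arrays.asList(
                new Row("tenant-a", "invoice"),
                new Row("tenant-b", "invoice"),
                new Row("tenant-a", "onboarding"));
        return String.join(",", definitionsFor("tenant-a", table));
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

With table-level separation, by contrast, each tenant would have its own table set (schema or database), so no marker filter is needed.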

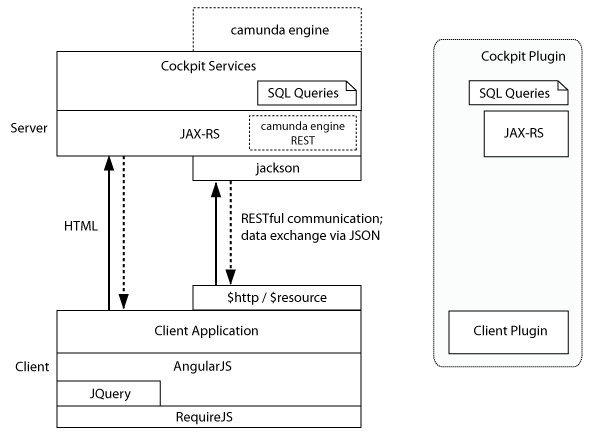

Web Application Architecture

The camunda BPM web applications are based on a RESTful architecture.

Frameworks used:

- JAX-RS based Rest API

- AngularJS

- RequireJS

- jQuery

- Twitter Bootstrap

Additional custom frameworks developed by camunda hackers:

- camunda-bpmn.js: camunda BPMN 2.0 JavaScript libraries

- ngDefine: integration of AngularJS into RequireJS powered applications

- angular-data-depend: toolkit for implementing complex, data heavy AngularJS applications

Supported Environments for Version 7.1

You can run the camunda BPM platform in every Java-runnable environment. camunda BPM is supported with our QA infrastructure in the following environments. Here you can find more information about our enterprise support.

Please find the supported environments for version 7.0 here.

Container / Application Server

- Apache Tomcat 6 / 7

- JBoss Application Server 7.2 and JBoss EAP 6.1 / 6.2

- GlassFish 3.1

- IBM WebSphere Application Server 8.0 / 8.5 (Enterprise Edition only)

- Oracle WebLogic Server 12c - excluding camunda Cycle (Enterprise Edition only)

Databases

- MySQL 5.1

- Oracle 10g / 11g

- IBM DB2 9.7

- PostgreSQL 9.1

- Microsoft SQL Server 2008 R2 / 2012 (see Configuration Note)

- H2 1.3

Webbrowser

- Google Chrome latest

- Mozilla Firefox latest

- Internet Explorer 9 / 10

Java

- Java 6 / 7

Java Runtime

- Sun / Oracle Hot Spot 6 / 7

- IBM® J9 virtual machine (JVM) 6 / 7

- Oracle JRockit 6 - R28.2.7

Eclipse (for camunda modeler)

- Eclipse Indigo / Juno / Kepler

Community Extensions

Camunda BPM is developed by Camunda as an open source project in collaboration with the community. The "core project" (namely "Camunda BPM platform") is the basis for the Camunda BPM product which is provided by Camunda as a commercial offering. The commercial Camunda BPM product contains additional (non-open source) features and is provided to Camunda BPM customers with service offerings such as enterprise support and bug fix releases.

camunda supports the community in its effort to build additional community extensions under the camunda BPM umbrella. Such community extensions are maintained by the community and are not part of the commercial camunda BPM product. camunda does not support community extensions as part of its commercial services to enterprise subscription customers.

List of Community Extensions

The following is a list of current (unsupported) community extensions:

- Apache Camel Integration

- AssertJ Testing Library

- Grails Plugin

- Needle Testing Library

- OSGi Integration

- Elastic Search Extension

- PHP SDK

Building a Community Extension

Do you have a great idea around open source BPM you want to share with the world? Awesome! camunda will support you in building your own community extension. Have a look at our contribution guidelines to find out how to propose a community project.

Third-Party Libraries

The following section lists all third-party libraries on which components of the camunda platform depend.

Process Engine

The process engine depends on the following third-party libraries:

- MyBatis mapping framework (Apache License 2.0) for object-relational mapping.

- Joda Time (Apache License 2.0) for parsing date formats.

- Java Uuid Generator (JUG) (Apache License 2.0) Id Generator. See documentation on Id-Generators.

Additional optional dependencies:

- Apache Commons Email (Apache License 2.0) for mail task support.

- Spring Framework Spring-Beans (Apache License 2.0) for configuration using camunda.cfg.xml.

- Spring Framework Spring-Core (Apache License 2.0) for configuration using camunda.cfg.xml.

- Spring Framework Spring-ASM (Apache License 2.0) for configuration using camunda.cfg.xml.

- Groovy (Apache License 2.0) for groovy script task support.

REST API

The REST API depends on the following third-party libraries:

- Jackson JAX-RS provider for JSON content type (Apache License 2.0)

- Apache Commons FileUpload (Apache License 2.0)

Additional optional dependencies:

- RESTEasy (Apache License 2.0) on Apache Tomcat only.

Spring Support

The Spring support depends on the following third-party libraries:

- Apache Commons DBCP (Apache License 2.0)

- Apache Commons Lang (Apache License 2.0)

- Spring Framework Spring-Beans (Apache License 2.0)

- Spring Framework Spring-Core (Apache License 2.0)

- Spring Framework Spring-ASM (Apache License 2.0)

- Spring Framework Spring-Context (Apache License 2.0)

- Spring Framework Spring-JDBC (Apache License 2.0)

- Spring Framework Spring-ORM (Apache License 2.0)

- Spring Framework Spring-TX (Apache License 2.0)

camunda Webapp

The camunda Webapp (Cockpit, Tasklist, Admin) depends on the following third-party libraries:

- AngularJS (MIT)

- AngularUI (MIT)

- Better DOM (MIT)

- Better Placeholder Polyfill (MIT)

- Twitter Bootstrap (Apache License 2.0)

- Bootstrap Slider (Apache License 2.0)

- Dojo (Academic Free License 2.1)

- jQuery (MIT)

- jQuery Mousewheel (MIT)

- jQuery Overscroll Fixed (MIT)

- jQuery UI (MIT)

- RequireJS (MIT)

camunda Cycle

Cycle depends on the following third-party libraries:

Javascript dependencies:

Java dependencies:

- Apache Commons Codec (Apache License 2.0)

- NekoHTML (Apache License 2.0)

- SAXON (Mozilla Public License 1.0)

- Apache Commons Virtual File System (Apache License 2.0)

- AspectJ runtime (Eclipse Public License 1.0)

- AspectJ weaver (Eclipse Public License 1.0)

- Jasypt (Apache License 2.0)

- SLF4J JCL (MIT)

- Spring Framework Spring-AOP (Apache License 2.0)

- Spring Framework Spring-ORM (Apache License 2.0)

- Spring Framework Spring-Web (Apache License 2.0)

- Thymeleaf (Apache License 2.0)

- Thymeleaf-Spring3 (Apache License 2.0)

- Tigris SVN Client Adapter (Apache License 2.0)

- SVNKit (TMate Open Source License)

- SVNKit JavaHL (TMate Open Source License)

- Gettext Commons (Apache License 2.0)

camunda Modeler

The camunda Modeler depends on the following third-party libraries:

- Eclipse Modeling Framework Project (EMF) (Eclipse Public License 1.0)

- Eclipse OSGi (Eclipse Public License 1.0)

- Eclipse Graphiti (Eclipse Public License 1.0)

- Eclipse Graphical Editing Framework (GEF) (Eclipse Public License 1.0)

- Eclipse XSD (Eclipse Public License 1.0)

- Eclipse UI (Eclipse Public License 1.0)

- Eclipse Core (Eclipse Public License 1.0)

- Eclipse Java development tools (JDT) (Eclipse Public License 1.0)

- Eclipse JFace (Eclipse Public License 1.0)

- Eclipse BPMN2 (Eclipse Public License 1.0)

- Eclipse WST (Eclipse Public License 1.0)

- Apache Xerces (Eclipse Public License 1.0)

- Eclipse WST SSE UI (Eclipse Public License 1.0)

Public API

The camunda platform provides a public API. This section covers the definition of the public API and backwards compatibility for version updates.

Definition of Public API

camunda BPM public API is limited to the following items:

Java API:

- camunda-engine: all non-implementation Java packages (package name does not contain impl)

- camunda-engine-spring: all non-implementation Java packages (package name does not contain impl)

- camunda-engine-cdi: all non-implementation Java packages (package name does not contain impl)

HTTP API (REST API):

camunda-engine-rest: HTTP interface (set of HTTP requests accepted by the REST API as documented in REST API reference). Java classes are not part of the public API.
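The package rule for the Java API can be expressed as a small check. The sketch below is one possible reading, not an official tool: it assumes that "does not contain impl" refers to a whole package segment named `impl`, and the class and method names are hypothetical.

```java
// Hypothetical helper; assumes the rule refers to a package segment named "impl".
class PublicApiCheckSketch {

    /** Returns true if no segment of the package name is exactly "impl". */
    static boolean isPublicApiPackage(String packageName) {
        for (String segment : packageName.split("\\.")) {
            if (segment.equals("impl")) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        System.out.println(isPublicApiPackage("org.camunda.bpm.engine.runtime"));
        System.out.println(isPublicApiPackage("org.camunda.bpm.engine.impl.cfg"));
    }
}
```

Matching on whole segments rather than substrings avoids misclassifying a package such as `org.example.simple`, whose name merely contains the letters "impl".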

Backwards Compatibility for Public API

The camunda versioning scheme follows the MAJOR.MINOR.PATCH pattern put forward by Semantic Versioning. camunda will maintain public API backwards compatibility for MINOR version updates. Example: Update from version 7.1.x to 7.2.x will not break the public API.
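The rule can be sketched as a version comparison. This is an illustration only: the class and method names are hypothetical, and it assumes, in line with Semantic Versioning, that PATCH updates within the same MINOR version are covered as well and that downgrades are not.

```java
// Hypothetical sketch of the compatibility rule for MAJOR.MINOR.PATCH versions.
class VersionCompatibilitySketch {

    /** Parses "MAJOR.MINOR.PATCH" into three integers. */
    static int[] parse(String version) {
        String[] parts = version.split("\\.");
        if (parts.length != 3) {
            throw new IllegalArgumentException("Expected MAJOR.MINOR.PATCH but got: " + version);
        }
        return new int[] {
            Integer.parseInt(parts[0]),
            Integer.parseInt(parts[1]),
            Integer.parseInt(parts[2])
        };
    }

    /** True if the update keeps the MAJOR version and does not move backwards. */
    static boolean coveredByCompatibilityPromise(String from, String to) {
        int[] a = parse(from);
        int[] b = parse(to);
        if (a[0] != b[0]) {
            return false; // MAJOR change: the public API may break
        }
        if (a[1] != b[1]) {
            return b[1] > a[1]; // MINOR update forwards is covered
        }
        return b[2] >= a[2]; // PATCH update (assumption, see lead-in)
    }

    public static void main(String[] args) {
        System.out.println(coveredByCompatibilityPromise("7.1.0", "7.2.0"));
        System.out.println(coveredByCompatibilityPromise("7.1.3", "8.0.0"));
    }
}
```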

Process Engine

Process Engine Bootstrapping

You have a number of options to configure and create a process engine, depending on whether you use an application-managed or a shared, container-managed process engine.

Application Managed Process Engine

You manage the process engine as part of your application. The following ways exist to configure it:

Shared, Container Managed Process Engine

A container of your choice (e.g. Tomcat, JBoss, GlassFish or WebSphere) manages the process engine for you. The configuration is carried out in a container specific way, see Runtime Container Integration for details.

ProcessEngineConfiguration bean

The camunda engine uses the ProcessEngineConfiguration bean to configure and construct a standalone process engine. There are multiple subclasses available that can be used to define the process engine configuration. These classes represent different environments and set defaults accordingly. It is a best practice to select the class that best matches your environment, to minimize the number of properties needed to configure the engine. The following classes are currently available:

- org.camunda.bpm.engine.impl.cfg.StandaloneProcessEngineConfiguration: The process engine is used in a standalone way. The engine itself will take care of the transactions. By default, the database will only be checked when the engine boots (and an exception is thrown if there is no database schema or the schema version is incorrect).

- org.camunda.bpm.engine.impl.cfg.StandaloneInMemProcessEngineConfiguration: This is a convenience class for unit testing purposes. The engine itself will take care of the transactions. An H2 in-memory database is used by default. The database will be created and dropped when the engine boots and shuts down. When using this, probably no additional configuration is needed (except when using for example the job executor or mail capabilities).

- org.camunda.bpm.engine.spring.SpringProcessEngineConfiguration: To be used when the process engine is used in a Spring environment. See the Spring integration section for more information.

- org.camunda.bpm.engine.impl.cfg.JtaProcessEngineConfiguration: To be used when the engine runs in standalone mode, with JTA transactions.

Bootstrap a Process Engine using Java API

You can configure the process engine programmatically by creating the right ProcessEngineConfiguration object or by using a predefined one:

ProcessEngineConfiguration.createStandaloneProcessEngineConfiguration();

ProcessEngineConfiguration.createStandaloneInMemProcessEngineConfiguration();

Now you can call the buildProcessEngine() operation to create a Process Engine:

ProcessEngine processEngine = ProcessEngineConfiguration.createStandaloneInMemProcessEngineConfiguration()

.setDatabaseSchemaUpdate(ProcessEngineConfiguration.DB_SCHEMA_UPDATE_FALSE)

.setJdbcUrl("jdbc:h2:mem:my-own-db;DB_CLOSE_DELAY=1000")

.setJobExecutorActivate(true)

.buildProcessEngine();

Configure Process Engine using Spring XML

The easiest way to configure your process engine is through an XML file called camunda.cfg.xml. Using that you can simply do:

ProcessEngine processEngine = ProcessEngines.getDefaultProcessEngine();

This will look for a camunda.cfg.xml file on the classpath and construct an engine based on the configuration in that file. The camunda.cfg.xml must contain a bean that has the id processEngineConfiguration; select the ProcessEngineConfiguration class that best suits your needs:

<bean id="processEngineConfiguration" class="org.camunda.bpm.engine.impl.cfg.StandaloneProcessEngineConfiguration">

The following snippet shows an example configuration:

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans.xsd">

<bean id="processEngineConfiguration" class="org.camunda.bpm.engine.impl.cfg.StandaloneProcessEngineConfiguration">

<property name="jdbcUrl" value="jdbc:h2:mem:camunda;DB_CLOSE_DELAY=1000" />

<property name="jdbcDriver" value="org.h2.Driver" />

<property name="jdbcUsername" value="sa" />

<property name="jdbcPassword" value="" />

<property name="databaseSchemaUpdate" value="true" />

<property name="jobExecutorActivate" value="false" />

<property name="mailServerHost" value="mail.my-corp.com" />

<property name="mailServerPort" value="5025" />

</bean>

</beans>

Note that the configuration XML is in fact a Spring configuration. This does not mean that the camunda engine can only be used in a Spring environment! We are simply leveraging the parsing and dependency injection capabilities of Spring internally for building up the engine.

The ProcessEngineConfiguration object can also be created programmatically using the configuration file. It is also possible to use a different bean id:

ProcessEngineConfiguration.createProcessEngineConfigurationFromResourceDefault();

ProcessEngineConfiguration.createProcessEngineConfigurationFromResource(String resource);

ProcessEngineConfiguration.createProcessEngineConfigurationFromResource(String resource, String beanName);

ProcessEngineConfiguration.createProcessEngineConfigurationFromInputStream(InputStream inputStream);

ProcessEngineConfiguration.createProcessEngineConfigurationFromInputStream(InputStream inputStream, String beanName);

It is also possible not to use a configuration file, and create a configuration based on defaults (see the different supported classes for more information).

ProcessEngineConfiguration.createStandaloneProcessEngineConfiguration();

ProcessEngineConfiguration.createStandaloneInMemProcessEngineConfiguration();

All these ProcessEngineConfiguration.createXXX() methods return a ProcessEngineConfiguration that can further be tweaked if needed. After calling the buildProcessEngine() operation, a ProcessEngine is created as explained above.

Configure Process Engine in bpm-platform.xml

The bpm-platform.xml file is used to configure camunda BPM platform in the following distributions:

- Apache Tomcat

- GlassFish Application Server

- IBM WebSphere Application Server

The <process-engine ... /> XML tag allows you to define a process engine:

<?xml version="1.0" encoding="UTF-8"?>

<bpm-platform xmlns="http://www.camunda.org/schema/1.0/BpmPlatform"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://www.camunda.org/schema/1.0/BpmPlatform http://www.camunda.org/schema/1.0/BpmPlatform">

<job-executor>

<job-acquisition name="default" />

</job-executor>

<process-engine name="default">

<job-acquisition>default</job-acquisition>

<configuration>org.camunda.bpm.engine.impl.cfg.StandaloneProcessEngineConfiguration</configuration>

<datasource>java:jdbc/ProcessEngine</datasource>

<properties>

<property name="history">full</property>

<property name="databaseSchemaUpdate">true</property>

<property name="authorizationEnabled">true</property>

</properties>

</process-engine>

</bpm-platform>

See Deployment Descriptor Reference for complete documentation of the syntax of the bpm-platform.xml file.

Configure Process Engine in processes.xml

The process engine can also be configured and bootstrapped using the META-INF/processes.xml file. See Section on processes.xml file for details.

See Deployment Descriptor Reference for complete documentation of the syntax of the processes.xml file.

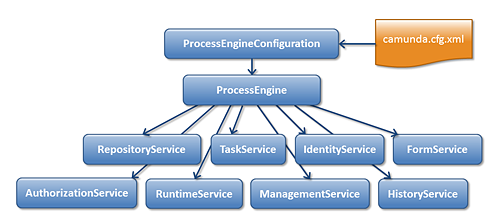

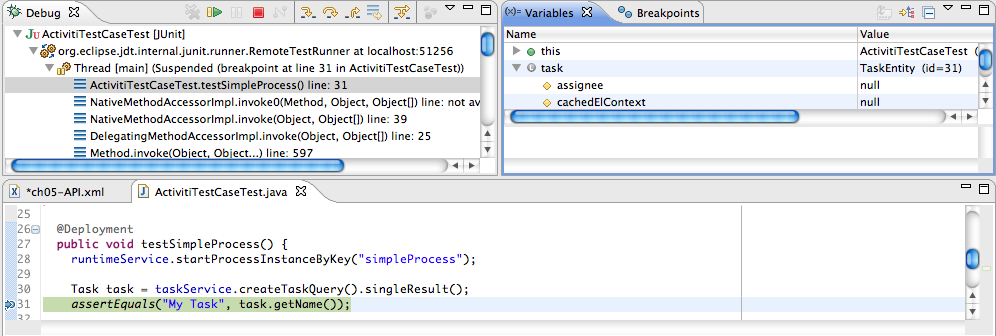

Process Engine API

Services API

The Java API is the most common way of interacting with the engine. The central starting point is the ProcessEngine, which can be created in several ways as described in the configuration section. From the ProcessEngine, you can obtain the various services that contain the workflow/BPM methods. ProcessEngine and the service objects are thread safe, so you can keep a single reference to each of them for a whole server.

ProcessEngine processEngine = ProcessEngines.getDefaultProcessEngine();

RuntimeService runtimeService = processEngine.getRuntimeService();

RepositoryService repositoryService = processEngine.getRepositoryService();

TaskService taskService = processEngine.getTaskService();

ManagementService managementService = processEngine.getManagementService();

IdentityService identityService = processEngine.getIdentityService();

HistoryService historyService = processEngine.getHistoryService();

FormService formService = processEngine.getFormService();

ProcessEngines.getDefaultProcessEngine() will initialize and build a process engine the first time it is called and afterwards always return the same process engine. Proper creation and closing of all process engines can be done with ProcessEngines.init() and ProcessEngines.destroy().
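The "build on first call, then always return the same engine" behavior can be pictured as a lazily filled registry. The sketch below is a conceptual stand-in and not the ProcessEngines implementation: `EngineRegistrySketch`, `Engine` and their methods are hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch of a lazily initialized engine registry.
class EngineRegistrySketch {

    /** Stand-in for a process engine; only carries its name. */
    static class Engine {
        final String name;

        Engine(String name) {
            this.name = name;
        }
    }

    private static final Map<String, Engine> ENGINES = new HashMap<>();

    /** Builds the default engine on the first call and reuses it afterwards. */
    static synchronized Engine getDefaultEngine() {
        return ENGINES.computeIfAbsent("default", Engine::new);
    }

    /** Drops all registered engines, so the next lookup builds a fresh one. */
    static synchronized void destroy() {
        ENGINES.clear();
    }

    /** Starts from an empty registry and checks that two lookups share one instance. */
    static boolean returnsSameInstance() {
        destroy();
        Engine first = getDefaultEngine();
        Engine second = getDefaultEngine();
        return first == second;
    }

    public static void main(String[] args) {
        System.out.println("same instance: " + returnsSameInstance());
    }
}
```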

The ProcessEngines class will scan for all camunda.cfg.xml and activiti-context.xml files. For all camunda.cfg.xml files, the process engine will be built in the typical way: ProcessEngineConfiguration.createProcessEngineConfigurationFromInputStream(inputStream).buildProcessEngine(). For all activiti-context.xml files, the process engine will be built in the Spring way: First the Spring application context is created and then the process engine is obtained from that application context.

All services are stateless. This means that you can easily run camunda BPM on multiple nodes in a cluster, each going to the same database, without having to worry about which machine actually executed previous calls. Any call to any service is idempotent regardless of where it is executed.

The RepositoryService is probably the first service needed when working with the camunda engine. This service offers operations for managing and manipulating deployments and process definitions. Without going into much detail here, a process definition is the Java counterpart of a BPMN 2.0 process. It is a representation of the structure and behavior of each of the steps of a process.

A deployment is the unit of packaging within the engine. A deployment can contain multiple BPMN 2.0 XML files and any other resource. The choice of what is included in one deployment is up to the developer. It can range from a single process BPMN 2.0 XML file to a whole package of processes and relevant resources (for example, the deployment 'hr-processes' could contain everything related to HR processes). The RepositoryService allows you to deploy such packages. Deploying a deployment means it is uploaded to the engine, where all processes are inspected and parsed before being stored in the database. From that point on, the deployment is known to the system and any process included in the deployment can be started.

Furthermore, this service allows you to:

- Query on deployments and process definitions known to the engine.

- Suspend and activate process definitions. Suspending means no further operations can be done on them, while activation is the opposite operation.

- Retrieve various resources such as files contained within the deployment or process diagrams that were auto generated by the engine.

While the RepositoryService is rather about static information (i.e. data that doesn't change, or at least not a lot), the RuntimeService is quite the opposite. It deals with starting new process instances of process definitions. As said above, a process definition defines the structure and behavior of the different steps in a process. A process instance is one execution of such a process definition. For each process definition there typically are many instances running at the same time. The RuntimeService is also the service used to retrieve and store process variables. This is data which is specific to the given process instance and can be used by various constructs in the process (e.g. an exclusive gateway often uses process variables to determine which path is chosen to continue the process). The RuntimeService also allows you to query process instances and executions. Executions are a representation of the 'token' concept of BPMN 2.0. Basically, an execution is a pointer to where the process instance currently is. Lastly, the RuntimeService is used whenever a process instance is waiting for an external trigger and the process needs to be continued. A process instance can have various wait states, and this service contains various operations to 'signal' the instance that the external trigger has been received and the process instance can be continued.

Tasks that need to be performed by actual human users of the system are core to the process engine. Everything around tasks is grouped in the TaskService, such as

- Querying tasks assigned to users or groups.

- Creating new standalone tasks. These are tasks that are not related to a process instance.

- Manipulating to which user a task is assigned or which users are in some way involved with the task.

- Claiming and completing a task. Claiming means that someone decided to be the assignee for the task, meaning that this user will complete the task. Completing means 'doing the work of the tasks'. Typically this is filling in a form of sorts.

The IdentityService is pretty simple. It allows the management (creation, update, deletion, querying, ...) of groups and users. It is important to understand that the core engine actually doesn't do any checking on users at runtime. For example, a task could be assigned to any user, but the engine does not verify if that user is known to the system. This is because the engine can also be used in conjunction with services such as LDAP, Active Directory, etc.

The FormService is an optional service, meaning that the camunda engine can be used perfectly well without it, without sacrificing any functionality. This service introduces the concept of a start form and a task form. A start form is a form that is shown to the user before the process instance is started, while a task form is the form that is displayed when a user wants to complete a task. You can define these forms in the BPMN 2.0 process definition. This service exposes this data in a way that is easy to work with. But again, this is optional as forms don't need to be embedded in the process definition.

The HistoryService exposes all historical data gathered by the engine. When executing processes, a lot of data can be kept by the engine (this is configurable) such as process instance start times, who did which tasks, how long it took to complete the tasks, which path was followed in each process instance, etc. This service exposes mainly query capabilities to access this data.

The ManagementService is typically not needed when coding custom applications. It allows you to retrieve information about the database tables and table metadata. Furthermore, it exposes query capabilities and management operations for jobs. Jobs are used in the engine for various things such as timers, asynchronous continuations, delayed suspension/activation, etc. Later on, these topics will be discussed in more detail.

For more detailed information on the service operations and the engine API, see the Javadocs.

Query API

There are multiple ways to query data from the engine:

- Java Query API: Fluent Java API to query engine entities (like ProcessInstances, Tasks, ...).

- REST Query API: REST API to query engine entities (like ProcessInstances, Tasks, ...).

- Native Queries: Provide your own SQL queries to retrieve engine entities (like ProcessInstances, Tasks, ...) if the Query API lacks the capabilities you need (e.g. OR conditions).

- Custom Queries: Use completely custom queries and your own MyBatis mapping to retrieve your own value objects or to join engine data with domain data.

- SQL Queries: Use database SQL queries for use cases like reporting.

The recommended way is to use one of the Query APIs.

The Java Query API allows you to write completely typesafe queries with a fluent API. You can add various conditions to your queries (all of which are applied together as a logical AND) and precisely one ordering. The following code shows an example:

List<Task> tasks = taskService.createTaskQuery()

.taskAssignee("kermit")

.processVariableValueEquals("orderId", "0815")

.orderByDueDate().asc()

.list();

You can find more information on this in the Javadocs.
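To make the "all conditions are ANDed, plus one ordering" behavior concrete, here is a minimal in-memory sketch. It does not use the engine at all: TaskRowSketch, TaskQuerySketch and their methods are hypothetical names invented for this illustration, and the real TaskQuery is backed by the database rather than a list.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical task row; not the engine's Task interface.
final class TaskRowSketch {
    final String assignee;
    final String orderId;
    final int dueDay;

    TaskRowSketch(String assignee, String orderId, int dueDay) {
        this.assignee = assignee;
        this.orderId = orderId;
        this.dueDay = dueDay;
    }
}

// Hypothetical fluent query over an in-memory list.
final class TaskQuerySketch {
    private final List<TaskRowSketch> source;
    // Starts as "match everything"; each condition narrows it with AND.
    private Predicate<TaskRowSketch> filter = task -> true;
    // At most one ordering; a later call replaces an earlier one.
    private Comparator<TaskRowSketch> ordering;

    TaskQuerySketch(List<TaskRowSketch> source) {
        this.source = source;
    }

    TaskQuerySketch taskAssignee(String assignee) {
        filter = filter.and(task -> assignee.equals(task.assignee));
        return this;
    }

    TaskQuerySketch orderIdEquals(String orderId) {
        filter = filter.and(task -> orderId.equals(task.orderId));
        return this;
    }

    TaskQuerySketch orderByDueDayAsc() {
        ordering = Comparator.comparingInt(task -> task.dueDay);
        return this;
    }

    List<TaskRowSketch> list() {
        List<TaskRowSketch> result = new ArrayList<>();
        for (TaskRowSketch task : source) {
            if (filter.test(task)) {
                result.add(task);
            }
        }
        if (ordering != null) {
            result.sort(ordering);
        }
        return result;
    }

    public static void main(String[] args) {
        List<TaskRowSketch> rows = new ArrayList<>();
        rows.add(new TaskRowSketch("kermit", "0815", 5));
        rows.add(new TaskRowSketch("gonzo", "0815", 3));
        List<TaskRowSketch> result = new TaskQuerySketch(rows)
                .taskAssignee("kermit")
                .orderIdEquals("0815")
                .orderByDueDayAsc()
                .list();
        System.out.println("matching tasks: " + result.size());
    }
}
```

Because every condition is combined with AND, an OR across two conditions cannot be expressed in this style, which is the gap that native queries are meant to fill.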

REST Query API

The Java Query API is exposed as a REST service as well; see the REST documentation for details.

Native Queries

Sometimes you need more powerful queries, e.g. queries using an OR operator or restrictions you cannot express using the Query API. For these cases, we introduced native queries, which allow you to write your own SQL queries. The return type is defined by the Query object you use and the data is mapped into the correct objects, e.g. Task, ProcessInstance, Execution, etc. Since the query will be fired at the database, you have to use table and column names as they are defined in the database. This requires some knowledge about the internal data structure, so it is recommended to use native queries with care. The table names can be retrieved via the API to keep the dependency as small as possible.

List<Task> tasks = taskService.createNativeTaskQuery()

.sql("SELECT * FROM " + managementService.getTableName(Task.class) + " T WHERE T.NAME_ = #{taskName}")

.parameter("taskName", "aOpenTask")

.list();

long count = taskService.createNativeTaskQuery()

.sql("SELECT count(*) FROM " + managementService.getTableName(Task.class) + " T1, "

+ managementService.getTableName(VariableInstanceEntity.class) + " V1 WHERE V1.TASK_ID_ = T1.ID_")

.count();

Custom Queries

For performance reasons it might sometimes be desirable not to query the engine objects but your own value objects or DTOs that collect data from different tables, possibly including your own domain classes.

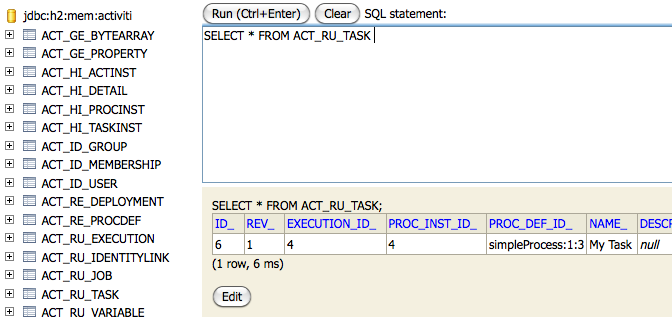

SQL Queries

The table layout is pretty straightforward - we concentrated on making it easy to understand. Hence it is OK to do SQL queries for e.g. reporting use cases. Just make sure that you do not mess up the engine data by updating the tables without exactly knowing what you are doing.

Process Engine Concepts

This section explains some core process engine concepts that are used in both the process engine API and the internal process engine implementation. Understanding these fundamentals makes it easier to use the process engine API.

Process Definitions

A process definition defines the structure of a process. You could say that the process definition is the process. camunda BPM uses BPMN 2.0 as its primary modeling language for modeling process definitions.

camunda BPM comes with two BPMN 2.0 References:

- The BPMN 2.0 Modeling Reference introduces the fundamentals of BPMN 2.0 and helps you to get started modeling processes. (Make sure to read the Tutorial as well.)

- The BPMN 2.0 Implementation Reference covers the implementation of the individual BPMN 2.0 constructs in camunda BPM. You should consult this reference if you want to implement and execute BPMN processes.

In camunda BPM you can deploy processes to the process engine in BPMN 2.0 XML format. The XML files are parsed and transformed into a process definition graph structure. This graph structure is executed by the process engine.

Querying for Process Definitions

You can query for all deployed process definitions using the Java API and the ProcessDefinitionQuery made available through the RepositoryService. Example:

List<ProcessDefinition> processDefinitions = repositoryService.createProcessDefinitionQuery()

.processDefinitionKey("invoice")

.orderByProcessDefinitionVersion()

.asc()

.list();

The above query returns all deployed process definitions for the key invoice ordered by their version property.

You can also query for process definitions using the REST API.

Keys and Versions

The key of a process definition (invoice in the example above) is the logical identifier of the process. It is used throughout the API, most prominently for starting process instances (see section on process instances). The key of a process definition is defined using the id property of the corresponding <process ... > element in the BPMN 2.0 XML file:

<process id="invoice" name="invoice receipt" isExecutable="true">

...

</process>

If you deploy multiple processes with the same key, they are treated as individual versions of the same process definition by the process engine.
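The relationship between keys and versions can be pictured with a small in-memory sketch. This is not engine code: DefinitionVersionSketch and DefinitionRegistrySketch are hypothetical names made up for this illustration, and the sketch assumes that versions are numbered from 1 and that "the latest version" is simply the highest number for a key.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for a deployed definition; not a camunda class.
final class DefinitionVersionSketch {
    final String key;
    final int version;

    DefinitionVersionSketch(String key, int version) {
        this.key = key;
        this.version = version;
    }
}

// Hypothetical in-memory registry that mimics versioning by key.
final class DefinitionRegistrySketch {
    private final Map<String, List<DefinitionVersionSketch>> byKey =
            new HashMap<String, List<DefinitionVersionSketch>>();

    // Deploying under an existing key adds a new version; older versions stay available.
    DefinitionVersionSketch deploy(String key) {
        List<DefinitionVersionSketch> versions = byKey.get(key);
        if (versions == null) {
            versions = new ArrayList<DefinitionVersionSketch>();
            byKey.put(key, versions);
        }
        DefinitionVersionSketch deployed = new DefinitionVersionSketch(key, versions.size() + 1);
        versions.add(deployed);
        return deployed;
    }

    // All versions for a key in ascending version order (empty if the key is unknown).
    List<DefinitionVersionSketch> versionsOf(String key) {
        List<DefinitionVersionSketch> versions = byKey.get(key);
        if (versions == null) {
            return new ArrayList<DefinitionVersionSketch>();
        }
        return new ArrayList<DefinitionVersionSketch>(versions);
    }

    // The highest version for a key, or null if nothing was deployed under it.
    DefinitionVersionSketch latest(String key) {
        List<DefinitionVersionSketch> versions = byKey.get(key);
        if (versions == null || versions.isEmpty()) {
            return null;
        }
        return versions.get(versions.size() - 1);
    }

    public static void main(String[] args) {
        DefinitionRegistrySketch registry = new DefinitionRegistrySketch();
        registry.deploy("invoice");
        registry.deploy("invoice");
        System.out.println("latest invoice version: " + registry.latest("invoice").version);
    }
}
```

In the engine itself, the query shown earlier (ordered by version) is the way to inspect the versions that exist for a key.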

Suspending Process Definitions

Suspending a process definition disables it temporarily: it cannot be instantiated while it is suspended. The RepositoryService Java API can be used to suspend a process definition. Similarly, you can activate a process definition to undo this effect.

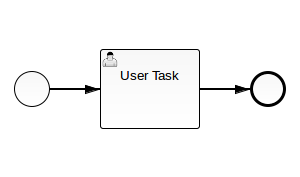

Process Instances

A process instance is an individual execution of a process definition. The relation of the process instance to the process definition is the same as the relation between Object and Class in Object Oriented Programming (the process instance playing the role of the object and the process definition playing the role of the class in this analogy).

The process engine is responsible for creating process instances and managing their state. If you start a process instance which contains a wait state, for example a user task, the process engine must make sure that the state of the process instance is captured and stored inside a database until the wait state is left (the user task is completed).

Starting a Process Instance

The simplest way to start a process instance is by using the startProcessInstanceByKey(...) method offered by the RuntimeService:

ProcessInstance instance = runtimeService.startProcessInstanceByKey("invoice");

You may optionally pass in a couple of variables:

Map<String, Object> variables = new HashMap<String,Object>();

variables.put("creditor", "Nice Pizza Inc.");

ProcessInstance instance = runtimeService.startProcessInstanceByKey("invoice", variables);

Process variables are available to all tasks in a process instance and are automatically persisted to the database in case the process instance reaches a wait state.

It is also possible to start a process instance using the REST API.

Querying for Process Instances

You can query for all currently running process instances using the ProcessInstanceQuery offered by the RuntimeService:

runtimeService.createProcessInstanceQuery()

.processDefinitionKey("invoice")

.variableValueEquals("creditor", "Nice Pizza Inc.")

.list();

The above query would select all process instances for the invoice process where the creditor is Nice Pizza Inc..

You can also query for process instances using the REST API.

Interacting with a Process Instance

Once you have performed a query for a particular process instance (or a list of process instances), you may want to interact with it. There are multiple possibilities to interact with a process instance, most prominently:

- Triggering it (make it continue execution):

- Through a Message Event

- Through a Signal Event

- Canceling it:

- Using the RuntimeService.deleteProcessInstance(...) method.

If your process uses user tasks, you can also interact with the process instance using the TaskService API.

Suspending Process Instances

Suspending a process instance is helpful if you want to ensure that it is not executed any further. For example, if process variables are in an undesired state, you can suspend the instance and change the variables safely.

In detail, suspension means to disallow all actions that change token state (i.e. the activities that are currently executed) of the instance. For example, it is not possible to signal an event or complete a user task for a suspended process instance, as these actions will continue the process instance execution subsequently. Nevertheless, actions like setting or removing variables are still allowed, as they do not change token state.

Also, when suspending a process instance, all tasks belonging to it will be suspended. Therefore, it will no longer be possible to invoke actions that have effects on the task's lifecycle (i.e. user assignment, task delegation, task completion, ...). However, any actions not touching the lifecycle like setting variables or adding comments will still be allowed.

A process instance can be suspended by using the suspendProcessInstanceById(...) method of the RuntimeService. Similarly it can be reactivated again.
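The suspension rules described above (actions that move the token are rejected, variable changes are still allowed) can be sketched with a tiny stand-alone model. SuspendableInstanceSketch is a hypothetical class written for this illustration; it is not how the engine implements suspension, and the exception type is an assumption of the sketch.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a process instance with a single token; not an engine class.
final class SuspendableInstanceSketch {
    private boolean suspended;
    private String currentActivity;
    private final Map<String, Object> variables = new HashMap<String, Object>();

    SuspendableInstanceSketch(String startActivity) {
        this.currentActivity = startActivity;
    }

    void suspend() {
        suspended = true;
    }

    void activate() {
        suspended = false;
    }

    // Completing a task moves the token, so it is rejected while suspended.
    void completeCurrentTask(String nextActivity) {
        if (suspended) {
            throw new IllegalStateException("instance is suspended");
        }
        currentActivity = nextActivity;
    }

    // Setting a variable does not change token state, so it is always allowed.
    void setVariable(String name, Object value) {
        variables.put(name, value);
    }

    Object getVariable(String name) {
        return variables.get(name);
    }

    String getCurrentActivity() {
        return currentActivity;
    }

    public static void main(String[] args) {
        SuspendableInstanceSketch instance = new SuspendableInstanceSketch("receivePayment");
        instance.suspend();
        instance.setVariable("creditor", "Nice Pizza Inc.");
        try {
            instance.completeCurrentTask("shipOrder");
        } catch (IllegalStateException e) {
            System.out.println("rejected while suspended: " + e.getMessage());
        }
    }
}
```

This mirrors the use case mentioned above: suspend the instance, repair its variables, then activate it again so it can continue.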

If you would like to suspend all process instances of a given process definition, you can use the method suspendProcessDefinitionById(...) of the RepositoryService and specify the suspendProcessInstances option.

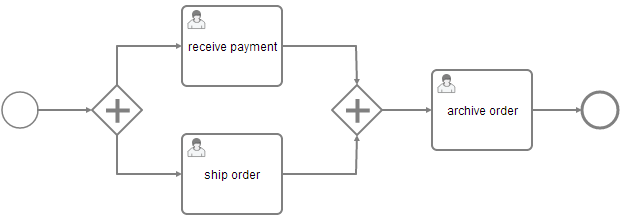

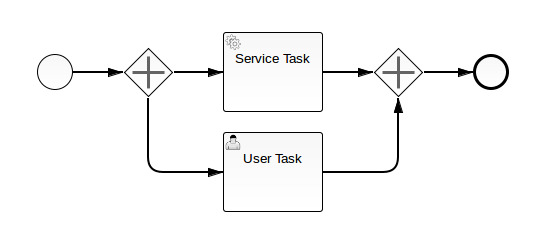

Executions

If your process instance contains multiple execution paths (like for instance after a parallel gateway), you must be able to differentiate the currently active paths inside the process instance. In the following example, two user tasks receive payment and ship order can be active at the same time.

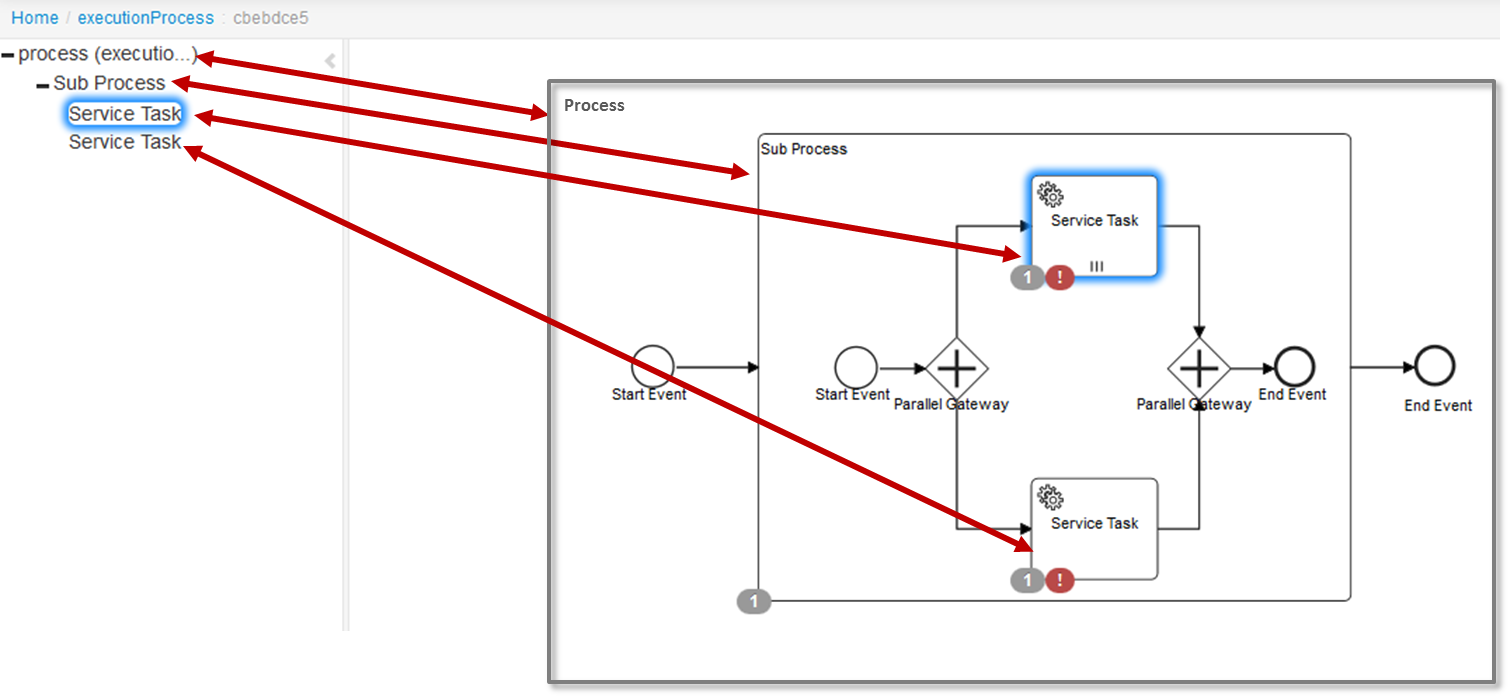

Internally the process engine creates two concurrent executions inside the process instance, one for each concurrent path of execution. Executions are also created for scopes, for example if the process engine reaches an Embedded Sub Process or in case of Multi Instance.

Executions are hierarchical and all executions inside a process instance span a tree, the process instance being the root-node in the tree. Note: the process instance itself is an execution.

Local Variables

Executions can have local variables. Local variables are only visible to the execution itself and its children but not to siblings or parents in the execution tree. Local variables are usually used if a part of the process works on some local data object or if an execution works on one item of a collection in case of multi instance.

In order to set a local variable on an execution, use the setVariableLocal method provided by the runtime service.

runtimeService.setVariableLocal(executionId, name, value);
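The visibility rule for local variables (visible to the execution and its children, hidden from siblings and parents) can be sketched as a lookup that walks up the tree. ExecutionScopeSketch is a hypothetical class invented for this illustration and not the engine's execution implementation.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of a node in the execution tree; not the engine's Execution class.
final class ExecutionScopeSketch {
    private final ExecutionScopeSketch parent;
    private final Map<String, Object> localVariables = new HashMap<String, Object>();

    // Pass null as parent to create the root (the process instance).
    ExecutionScopeSketch(ExecutionScopeSketch parent) {
        this.parent = parent;
    }

    // Stores the variable on this execution only.
    void setVariableLocal(String name, Object value) {
        localVariables.put(name, value);
    }

    // Looks at this execution first, then at its ancestors; never at siblings or children.
    Object getVariable(String name) {
        for (ExecutionScopeSketch scope = this; scope != null; scope = scope.parent) {
            if (scope.localVariables.containsKey(name)) {
                return scope.localVariables.get(name);
            }
        }
        return null;
    }

    public static void main(String[] args) {
        ExecutionScopeSketch root = new ExecutionScopeSketch(null);
        ExecutionScopeSketch left = new ExecutionScopeSketch(root);
        ExecutionScopeSketch right = new ExecutionScopeSketch(root);
        left.setVariableLocal("item", "pizza");
        System.out.println("left sees item: " + (left.getVariable("item") != null));
        System.out.println("right sees item: " + (right.getVariable("item") != null));
    }
}
```

In a multi-instance scenario, each instance would correspond to its own child node holding its own item, so the instances cannot overwrite each other's data.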

Querying for executions

You can query for executions using the ExecutionQuery offered by the RuntimeService:

runtimeService.createExecutionQuery()

.processInstanceId(someId)

.list();

The above query returns all executions for a given process instance.

You can also query for executions using the REST API.

Activity Instances

The activity instance concept is similar to the execution concept but takes a different perspective. While an execution can be imagined as a token moving through the process, an activity instance represents an individual instance of an activity (task, subprocess, ...). The concept of the activity instance is thus more state-oriented.

Activity instances also span a tree, following the scope structure provided by BPMN 2.0. Activities that are "on the same level of subprocess" (i.e. part of the same scope, contained in the same subprocess) will have their activity instances at the same level in the tree.

Examples:

- Process with two parallel user tasks after parallel Gateway: in the activity instance tree you will see two activity instances below the root instance, one for each user task.

- Process with two parallel Multi Instance user tasks after parallel Gateway: in the activity instance tree, all instances of both user tasks will be listed below the root activity instance. Reason: all activity instances are at the same level of subprocess.

- User task inside embedded subprocess: the activity instance tree will have 3 levels: the root instance representing the process instance itself, below it an activity instance representing the instance of the embedded subprocess, and below this one, the activity instance representing the user task.

Retrieving an Activity Instance

Currently activity instances can only be retrieved for a process instance:

ActivityInstance rootActivityInstance = runtimeService.getActivityInstance(processInstance.getProcessInstanceId());

You can retrieve the activity instance tree using the REST API as well.

Identity & Uniqueness

Each activity instance is assigned a unique id. The id is persistent: if you retrieve the activity instance tree multiple times, the same activity instance ids will be returned for the same activity instances. (However, there might be different executions assigned, see below.)

Relation to Executions

The Execution concept in the process engine is not completely aligned with the activity instance concept because the execution tree is in general not aligned with the activity / scope concept in BPMN. In general, there is an n-1 relationship between Executions and ActivityInstances, i.e. at a given point in time, an activity instance can be linked to multiple executions. In addition, it is not guaranteed that the same execution that started a given activity instance will also end it. The process engine performs several internal optimizations concerning the compacting of the execution tree, which might lead to executions being reordered and pruned. This can lead to situations where a given execution starts an activity instance but another execution ends it. Another special case is the process instance: if the process instance is executing a non-scope activity (for example a user task) below the process definition scope, it will be referenced by both the root activity instance and the user task activity instance.

Note: If you need to interpret the state of a process instance in terms of a BPMN process model, it is usually easier to use the activity instance tree as opposed to the execution tree.

Jobs and Job Definitions

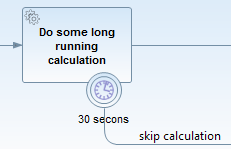

The camunda process engine includes a component named the Job Executor. The Job Executor is a scheduling component responsible for performing asynchronous background work. Consider the example of a Timer Event: whenever the process engine reaches the timer event, it will stop execution, persist the current state to the database and create a job to resume execution in the future. A job has a due date which is calculated using the timer expression provided in the BPMN XML.

When a process is deployed, the process engine creates a Job Definition for each activity in the process which will create jobs at runtime. This allows you to query information about timers and asynchronous continuations in your processes.

Querying for jobs

Using the management service, you can query for jobs. The following selects all jobs which are due after a certain date:

managementService.createJobQuery()

.duedateHigherThan(someDate)

.list();

It is possible to query for jobs using the REST API.

Querying for Job Definitions

Using the management service, you can also query for job definitions. The following selects all job definitions from a specific process definition:

managementService.createJobDefinitionQuery()

.processDefinitionKey("orderProcess")

.list();

The result will contain information about all timers and asynchronous continuations in the order process.

It is possible to query for job definitions using the REST API.

Suspending and Activating Job Execution

Job suspension prevents jobs from being executed. Suspension of job execution can be controlled on different levels:

- Job Instance Level: individual jobs can be suspended either directly through the managementService.suspendJobById(...) API or transitively when suspending a process instance or a job definition.

- Job Definition Level: all instances of a certain timer or activity can be suspended.

Job suspension by Job definition allows you to suspend all instances of a certain timer or an asynchronous continuation. Intuitively, this allows you to suspend a certain activity in a process in a way that all process instances will advance until they have reached this activity and then not continue since the activity is suspended.
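The two suspension levels can be pictured with a small stand-alone model. JobSketch and JobSuspensionSketch are hypothetical names invented here, and the sketch makes a simplifying assumption: a job is treated as executable only if it is due, it is not suspended itself, and its job definition is not suspended. How the engine actually stores and propagates suspension state may differ.

```java
import java.util.HashSet;
import java.util.Set;

// Hypothetical job record; not the engine's Job interface.
final class JobSketch {
    final String id;
    final String jobDefinitionId;
    final long dueDateMillis;

    JobSketch(String id, String jobDefinitionId, long dueDateMillis) {
        this.id = id;
        this.jobDefinitionId = jobDefinitionId;
        this.dueDateMillis = dueDateMillis;
    }
}

// Hypothetical bookkeeping for suspension on job level and job definition level.
final class JobSuspensionSketch {
    private final Set<String> suspendedJobIds = new HashSet<String>();
    private final Set<String> suspendedJobDefinitionIds = new HashSet<String>();

    // Job instance level: affects one job only.
    void suspendJob(String jobId) {
        suspendedJobIds.add(jobId);
    }

    void activateJob(String jobId) {
        suspendedJobIds.remove(jobId);
    }

    // Job definition level: affects every job created from that definition.
    void suspendJobDefinition(String jobDefinitionId) {
        suspendedJobDefinitionIds.add(jobDefinitionId);
    }

    void activateJobDefinition(String jobDefinitionId) {
        suspendedJobDefinitionIds.remove(jobDefinitionId);
    }

    // A job may run only if it is due and not suspended on either level.
    boolean isExecutable(JobSketch job, long nowMillis) {
        boolean due = job.dueDateMillis <= nowMillis;
        boolean suspended = suspendedJobIds.contains(job.id)
                || suspendedJobDefinitionIds.contains(job.jobDefinitionId);
        return due && !suspended;
    }

    public static void main(String[] args) {
        JobSuspensionSketch suspension = new JobSuspensionSketch();
        JobSketch payment = new JobSketch("job-1", "processPayment", 1000L);
        suspension.suspendJobDefinition("processPayment");
        System.out.println("executable: " + suspension.isExecutable(payment, 2000L));
    }
}
```

In this model, a job created after its definition was suspended is blocked as well, which corresponds to process instances advancing up to the suspended activity and then waiting there.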

Let's assume there is a process deployed with key orderProcess which contains a service task named processPayment. The service task has an asynchronous continuation configured which causes it to be executed by the job executor. The following example shows how you can prevent the processPayment service from being executed:

List<JobDefinition> jobDefinitions = managementService.createJobDefinitionQuery()

.processDefinitionKey("orderProcess")

.activityIdIn("processPayment")

.list();

for (JobDefinition jobDefinition : jobDefinitions) {

managementService.suspendJobDefinitionById(jobDefinition.getId(), true);

}

Delegation Code

Delegation Code allows you to execute external Java code or evaluate expressions when certain events occur during process execution.

There are different types of Delegation Code:

- Java Delegates can be attached to a BPMN ServiceTask.

- Execution Listeners can be attached to any event within the normal token flow, e.g. starting a process instance or entering an activity.

- Task Listeners can be attached to events within the user task lifecycle, e.g. creation or completion of a user task.

You can create generic delegation code and configure this via the BPMN 2.0 XML using so called Field Injection.

Java Delegate

To implement a class that can be called during process execution, this class needs to implement the org.camunda.bpm.engine.delegate.JavaDelegate interface and provide the required logic in the execute method. When process execution arrives at this particular step, it will execute the logic defined in that method and leave the activity in the default BPMN 2.0 way.

As an example, let's create a Java class that can be used to change a process variable String to uppercase. This class needs to implement the org.camunda.bpm.engine.delegate.JavaDelegate interface, which requires us to implement the execute(DelegateExecution) method. It's this operation that will be called by the engine and which needs to contain the business logic. Process instance information such as process variables can be accessed and manipulated through the DelegateExecution interface (see the Javadocs for its operations).

public class ToUppercase implements JavaDelegate {

public void execute(DelegateExecution execution) throws Exception {

String var = (String) execution.getVariable("input");

var = var.toUpperCase();

execution.setVariable("input", var);

}

}

Note: there will be only one instance of that Java class created for the serviceTask it is defined on. All process instances share the same class instance that will be used to call execute(DelegateExecution). This means the class must be thread-safe and should not keep per-execution state in member variables.

The classes that are referenced in the process definition (i.e. by using camunda:class) are NOT instantiated during deployment. Only when a process execution arrives for the first time at the point in the process where the class is used will an instance of that class be created. If the class cannot be found, a ProcessEngineException will be thrown. The reasoning for this is that the environment (and more specifically the classpath) when you are deploying is often different from the actual runtime environment.

Activity Behavior

Instead of writing a Java Delegate, it is also possible to provide a class that implements the org.camunda.bpm.engine.impl.pvm.delegate.ActivityBehavior interface. Implementations then have access to the more powerful ActivityExecution, which for example also allows you to influence the control flow of the process. Note, however, that this is not a very good practice and should be avoided as much as possible. It is advised to use the ActivityBehavior interface only for advanced use cases and if you know exactly what you're doing.

Field Injection

It's possible to inject values into the fields of the delegated classes. The following types of injection are supported:

- Fixed string values

- Expressions

If available, the value is injected through a public setter method on your delegated class, following the Java Bean naming conventions (e.g. field firstName has setter setFirstName(...)). If no setter is available for that field, the value of the private member will be set on the delegate (but using private fields is strongly discouraged, see the warning below).

Regardless of the type of value declared in the process definition, the type of the setter/private field on the injection target should always be org.camunda.bpm.engine.delegate.Expression.

Warning: private fields cannot always be modified! This does not work with e.g. CDI beans (because you have proxies instead of real objects) or with some SecurityManager configurations. Please always use a public setter method for the fields you want to have injected!

The following code snippet shows how to inject a constant value into a field. Note that the field injection declarations must be wrapped in an extensionElements XML element, which is a requirement of the BPMN 2.0 XML Schema.

<serviceTask id="javaService"

name="Java service invocation"

camunda:class="org.camunda.bpm.examples.bpmn.servicetask.ToUpperCaseFieldInjected">

<extensionElements>

<camunda:field name="text" stringValue="Hello World" />

</extensionElements>

</serviceTask>

The class ToUpperCaseFieldInjected has a field text which is of type org.camunda.bpm.engine.delegate.Expression. When calling text.getValue(execution), the configured string value Hello World will be returned.

Alternatively, for long texts (e.g. an inline e-mail) the camunda:string child element can be used:

<serviceTask id="javaService"

name="Java service invocation"

camunda:class="org.camunda.bpm.examples.bpmn.servicetask.ToUpperCaseFieldInjected">

<extensionElements>

<camunda:field name="text">

<camunda:string>

Hello World

</camunda:string>

</camunda:field>

</extensionElements>

</serviceTask>

To inject values that are dynamically resolved at runtime, expressions can be used. Those expressions can use process variables, CDI or Spring beans. As already noted, an instance of the Java class is shared among all process instances in a service task. To have dynamic injection of values in fields, you can inject value and method expressions in an org.camunda.bpm.engine.delegate.Expression, which can be evaluated/invoked using the DelegateExecution passed in the execute method.

<serviceTask id="javaService" name="Java service invocation"

camunda:class="org.camunda.bpm.examples.bpmn.servicetask.ReverseStringsFieldInjected">

<extensionElements>

<camunda:field name="text1">

<camunda:expression>${genderBean.getGenderString(gender)}</camunda:expression>

</camunda:field>

<camunda:field name="text2">

<camunda:expression>Hello ${gender == 'male' ? 'Mr.' : 'Mrs.'} ${name}</camunda:expression>

</camunda:field>

</extensionElements>

</serviceTask>

The example class below uses the injected expressions and resolves them using the current DelegateExecution.

public class ReverseStringsFieldInjected implements JavaDelegate {

private Expression text1;

private Expression text2;

public void execute(DelegateExecution execution) {

String value1 = (String) text1.getValue(execution);

execution.setVariable("var1", new StringBuffer(value1).reverse().toString());

String value2 = (String) text2.getValue(execution);

execution.setVariable("var2", new StringBuffer(value2).reverse().toString());

}

}

Alternatively, you can also set the expressions as an attribute instead of a child-element, to make the XML less verbose.

<camunda:field name="text1" expression="${genderBean.getGenderString(gender)}" />

<camunda:field name="text2" expression="Hello ${gender == 'male' ? 'Mr.' : 'Mrs.'} ${name}" />

Since the Java class instance is reused, the injection only happens once, when the serviceTask is called for the first time. If the fields are altered by your code, the values won't be re-injected, so you should treat them as immutable and not make any changes to them.

Execution Listener

Execution listeners allow you to execute external Java code or evaluate an expression when certain events occur during process execution. The events that can be captured are:

- Start and ending of a process instance.

- Taking a transition.

- Start and ending of an activity.

- Start and ending of a gateway.

- Start and ending of intermediate events.

- Ending a start event or starting an end event.

The following process definition contains 3 execution listeners:

<process id="executionListenersProcess">

<extensionElements>

<camunda:executionListener

event="start"

class="org.camunda.bpm.examples.bpmn.executionlistener.ExampleExecutionListenerOne" />

</extensionElements>

<startEvent id="theStart" />

<sequenceFlow sourceRef="theStart" targetRef="firstTask" />

<userTask id="firstTask" />

<sequenceFlow sourceRef="firstTask" targetRef="secondTask">

<extensionElements>

<camunda:executionListener

class="org.camunda.bpm.examples.bpmn.executionListener.ExampleExecutionListenerTwo" />

</extensionElements>

</sequenceFlow>

<userTask id="secondTask">

<extensionElements>

<camunda:executionListener expression="${myPojo.myMethod(execution.eventName)}" event="end" />

</extensionElements>

</userTask>

<sequenceFlow sourceRef="secondTask" targetRef="thirdTask" />

<userTask id="thirdTask" />

<sequenceFlow sourceRef="thirdTask" targetRef="theEnd" />

<endEvent id="theEnd" />

</process>

The first execution listener is notified when the process starts. The listener is an external Java class (like ExampleExecutionListenerOne) and should implement the org.camunda.bpm.engine.delegate.ExecutionListener interface. When the event occurs (in this case the start event), the method notify(DelegateExecution execution) is called.

public class ExampleExecutionListenerOne implements ExecutionListener {

public void notify(DelegateExecution execution) throws Exception {

execution.setVariable("variableSetInExecutionListener", "firstValue");

execution.setVariable("eventReceived", execution.getEventName());

}

}

It is also possible to use a delegation class that implements the org.camunda.bpm.engine.delegate.JavaDelegate interface. These delegation classes can then be reused in other constructs, such as a delegation for a serviceTask.

The second execution listener is called when the transition is taken. Note that the listener element doesn't define an event, since only take events are fired on transitions. Values in the event attribute are ignored when a listener is defined on a transition.

The last execution listener is called when activity secondTask ends. Instead of using the class attribute on the listener declaration, an expression is defined which is evaluated/invoked when the event is fired.

<camunda:executionListener expression="${myPojo.myMethod(execution.eventName)}" event="end" />

As with other expressions, execution variables are resolved and can be used. Because the execution implementation object has a property that exposes the event name, it's possible to pass the event name to your methods using execution.eventName.

Execution listeners also support using a delegateExpression, similar to a service task.

<camunda:executionListener event="start" delegateExpression="${myExecutionListenerBean}" />

Task Listener

A task listener is used to execute custom Java logic or an expression upon the occurrence of a certain task-related event.

A task listener can only be added in the process definition as a child element of a user task. Note that this also must happen as a child of the BPMN 2.0 extensionElements and in the camunda namespace, since a task listener is a construct specific for the camunda engine.

<userTask id="myTask" name="My Task" >

<extensionElements>

<camunda:taskListener event="create" class="org.camunda.bpm.MyTaskCreateListener" />

</extensionElements>

</userTask>

A task listener supports the following attributes:

- event (required): the type of task event on which the task listener will be invoked. Possible events are:

- create: occurs when the task has been created and all task properties are set.

- assignment: occurs when the task is assigned to somebody. Note: when process execution arrives in a userTask, first an assignment event will be fired, before the create event is fired. This might seem an unnatural order, but the reason is pragmatic: when receiving the create event, we usually want to inspect all properties of the task including the assignee.

- complete: occurs when the task is completed and just before the task is deleted from the runtime data.

- delete: occurs just before the task is deleted from the runtime data.

- class: the delegation class that must be called. This class must implement the org.camunda.bpm.engine.delegate.TaskListener interface.

public class MyTaskCreateListener implements TaskListener {

public void notify(DelegateTask delegateTask) {

// Custom logic goes here

}

}

It is also possible to use field injection to pass process variables or the execution to the delegation class. Note that an instance of the delegation class is created upon process deployment (as is the case with any class delegation in the engine), which means that the instance is shared between all process instance executions.

- expression (cannot be used together with the class attribute): specifies an expression that will be executed when the event happens. It is possible to pass the DelegateTask object and the name of the event (using task.eventName) as parameters to the called object.

<camunda:taskListener event="create" expression="${myObject.callMethod(task, task.eventName)}" />

- delegateExpression: allows you to specify an expression that resolves to an object implementing the TaskListener interface, similar to a service task.

<camunda:taskListener event="create" delegateExpression="${myTaskListenerBean}" />

Field Injection on Listener

When using listeners configured with the class attribute, field injection can be applied. This is exactly the same mechanism as described for Java Delegates, which contains an overview of the possibilities provided by field injection.

The fragment below shows a simple example process with an execution listener with fields injected:

<process id="executionListenersProcess">

<extensionElements>

<camunda:executionListener class="org.camunda.bpm.examples.bpmn.executionListener.ExampleFieldInjectedExecutionListener" event="start">

<camunda:field name="fixedValue" stringValue="Yes, I am " />

<camunda:field name="dynamicValue" expression="${myVar}" />

</camunda:executionListener>

</extensionElements>

<startEvent id="theStart" />

<sequenceFlow sourceRef="theStart" targetRef="firstTask" />

<userTask id="firstTask" />

<sequenceFlow sourceRef="firstTask" targetRef="theEnd" />

<endEvent id="theEnd" />

</process>

The actual listener implementation may look like the following:

public class ExampleFieldInjectedExecutionListener implements ExecutionListener {

private Expression fixedValue;

private Expression dynamicValue;

public void notify(DelegateExecution execution) throws Exception {

String value =

fixedValue.getValue(execution).toString() +

dynamicValue.getValue(execution).toString();

execution.setVariable("var", value);

}

}

The class ExampleFieldInjectedExecutionListener concatenates the two injected fields (one fixed and the other dynamic) and stores the result in the process variable var.

@Deployment(resources = {

"org/camunda/bpm/examples/bpmn/executionListener/ExecutionListenersFieldInjectionProcess.bpmn20.xml"

})

public void testExecutionListenerFieldInjection() {

Map<String, Object> variables = new HashMap<String, Object>();

variables.put("myVar", "listening!");

ProcessInstance processInstance = runtimeService.startProcessInstanceByKey("executionListenersProcess", variables);

Object varSetByListener = runtimeService.getVariable(processInstance.getId(), "var");

assertNotNull(varSetByListener);

assertTrue(varSetByListener instanceof String);

// Result is a concatenation of fixed injected field and injected expression

assertEquals("Yes, I am listening!", varSetByListener);

}

Accessing process engine services

It is possible to access the public API services (RuntimeService, TaskService, RepositoryService ...) from delegation code. The following is an example showing how to access the TaskService from a JavaDelegate implementation.

public class DelegateExample implements JavaDelegate {

public void execute(DelegateExecution execution) throws Exception {

TaskService taskService = execution.getProcessEngineServices().getTaskService();

taskService.createTaskQuery()...;

}

}

Throwing BPMN Errors from Delegation Code

In the above example the error event is attached to a Service Task. In order to get this to work the Service Task has to throw the corresponding error. This is done by using a provided Java exception class from within your Java code (e.g. in the JavaDelegate):

public class BookOutGoodsDelegate implements JavaDelegate {

public void execute(DelegateExecution execution) throws Exception {

try {

...

} catch (NotOnStockException ex) {

throw new BpmnError(NOT_ON_STOCK_ERROR);

}

}

}

Process Versioning

Versioning of process definitions

Business processes are long running by nature: process instances may last for weeks or months. In the meantime, the state of each process instance is stored in the database. Sooner or later, however, you might want to change the process definition even though instances are still running.

This is supported by the process engine:

- If you redeploy a changed process definition you get a new version in the database.

- Running process instances will keep running in the version they were started with.

- New process instances will run in the new version unless a different version is specified explicitly.

- Migrating process instances to a new version is supported within certain limits.

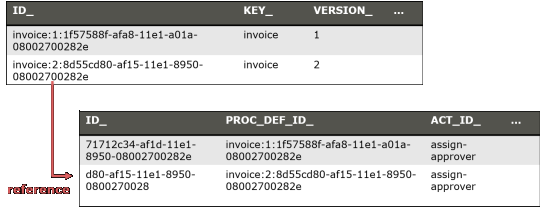

So you can see different version in the process definition table and the process instances are linked to this:

Which version will be used

When you start a process instance

- by key: It starts an instance of the latest deployed version of the process definition with the key.

- by id: It starts an instance of the deployed process definition with the database id. By using this you can start a specific version.

The default and recommended usage is to just use startProcessInstanceByKey and always use the latest version:

processEngine.getRuntimeService().startProcessInstanceByKey("invoice");

// will use the latest version (2 in our example)

If you want to specifically start an instance of an old process definition, use a Process Definition Query to find the correct ProcessDefinition id and startProcessInstanceById:

ProcessDefinition pd = processEngine.getRepositoryService().createProcessDefinitionQuery()

.processDefinitionKey("invoice")

.processDefinitionVersion(1).singleResult();

processEngine.getRuntimeService().startProcessInstanceById(pd.getId());

When you use BPMN CallActivities you can configure which version is used:

<callActivity id="callSubProcess" calledElement="checkCreditProcess"

camunda:calledElementBinding="latest|deployment|version"

camunda:calledElementVersion="17">

</callActivity>

The options are

- latest: use the latest version of the process definition (as with startProcessInstanceByKey).

- deployment: use the process definition in the version matching the version of the calling process. This works if they are deployed within one deployment - as then they are always versioned together (see Process Application Deployment for more details).

- version: specify the version hard coded in the XML.

Key vs. ID of a process definition

You might have spotted that two different columns exist in the process definition table with different meanings:

Key: The key is the unique identifier of the process definition in the XML, so its value is read from the id attribute in the XML:

<bpmn2:process id="invoice" ...

Id: The id is the database primary key and an artificial key normally combined out of the key, the version and a generated id (note that the ID may be shortened to fit into the database column, so there is no guarantee that the id is built this way).
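The relationship between key, version and id can be illustrated with a small stand-alone model. This is only a sketch under simplifying assumptions, not the engine's implementation: DefinitionRegistrySketch and all of its members are hypothetical names, and the id format key:version:counter is chosen for illustration (as noted above, the real id is not guaranteed to be built this way).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory model of a process definition table.
class DefinitionRegistrySketch {

  static final class Definition {
    final String id;      // artificial primary key
    final String key;     // value of the id attribute in the XML
    final int version;    // incremented on every redeployment of the key

    Definition(String id, String key, int version) {
      this.id = id;
      this.key = key;
      this.version = version;
    }
  }

  private final Map<String, List<Definition>> versionsByKey = new HashMap<>();
  private final Map<String, Definition> definitionsById = new HashMap<>();
  private int generatedIdCounter = 0;

  // Deploying a key again adds a new version; older versions stay available.
  Definition deploy(String key) {
    List<Definition> versions = versionsByKey.get(key);
    if (versions == null) {
      versions = new ArrayList<>();
      versionsByKey.put(key, versions);
    }
    int version = versions.size() + 1;
    String id = key + ":" + version + ":" + (++generatedIdCounter);
    Definition definition = new Definition(id, key, version);
    versions.add(definition);
    definitionsById.put(id, definition);
    return definition;
  }

  // Lookup by key resolves to the latest deployed version.
  Definition latestByKey(String key) {
    List<Definition> versions = versionsByKey.get(key);
    if (versions == null) {
      throw new IllegalArgumentException("no definition deployed with key " + key);
    }
    return versions.get(versions.size() - 1);
  }

  // Lookup by id resolves to exactly one specific version.
  Definition findById(String id) {
    Definition definition = definitionsById.get(id);
    if (definition == null) {
      throw new IllegalArgumentException("no definition with id " + id);
    }
    return definition;
  }

  public static void main(String[] args) {
    DefinitionRegistrySketch registry = new DefinitionRegistrySketch();
    Definition first = registry.deploy("invoice");
    registry.deploy("invoice");
    System.out.println(registry.latestByKey("invoice").version);
    System.out.println(registry.findById(first.id).version);
  }
}
```

The model mirrors the two ways of starting an instance described earlier: a lookup by key always lands on the newest version, while a lookup by id pins one particular version.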

Version Migration

Sometimes it is necessary to migrate (upgrade) running process instances to a new version, for example because you added an important new task or fixed a bug. In this case, the running process instances can be migrated to the new version.

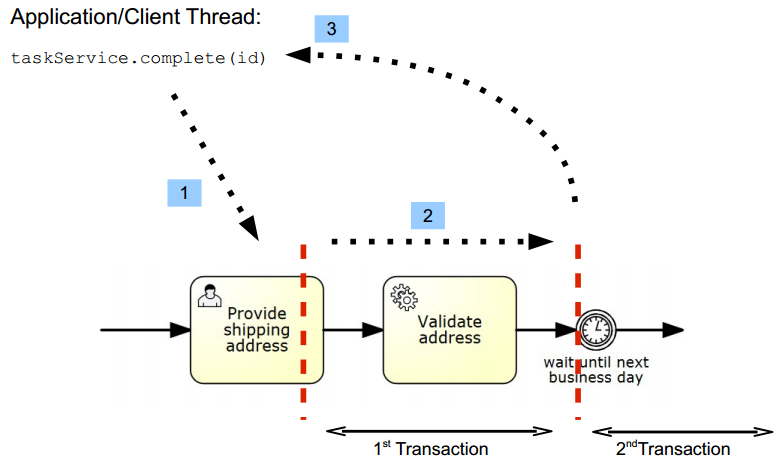

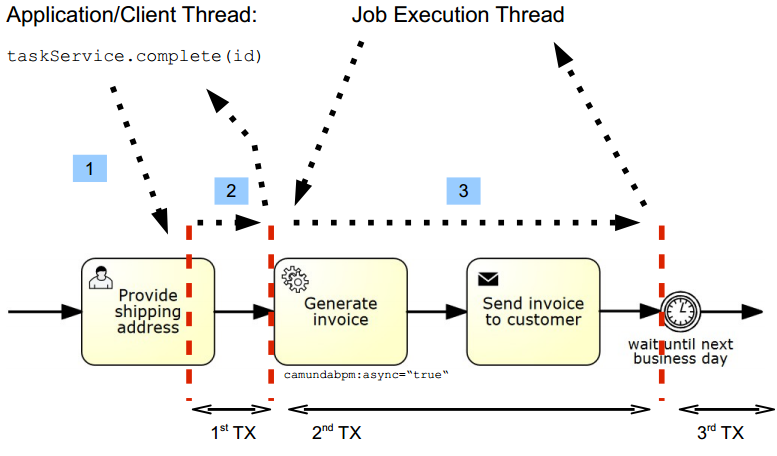

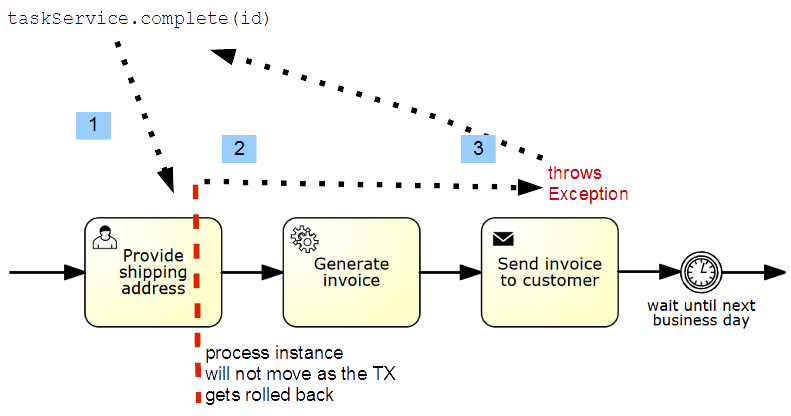

Please note that migration can only be applied if a process instance is currently in a persistent wait state, see Transactions in Processes.

public void migrateVersion() {

String processInstanceId = "71712c34-af1d-11e1-8950-08002700282e";

int newVersion = 2;

SetProcessDefinitionVersionCmd command =

new SetProcessDefinitionVersionCmd(processInstanceId, newVersion);

((ProcessEngineImpl) ProcessEngines.getDefaultProcessEngine())

.getProcessEngineConfiguration()

.getCommandExecutorTxRequired().execute(command);

}

Risks and limitations of Version Migration

Process Version Migration is not an easy topic in itself. Migrating process instances to a new version works only if:

- for all currently existing executions and running tokens

- the "current activity" with the same id still exists in the new process definition

- and the scopes, sub executions, jobs and so on are still valid.

Hence the cases in which this simple instance migration works are limited. The following examples will cause problems:

- If the new version introduces a new (message / signal / timer) boundary event attached to an activity, process instances which are waiting at this activity cannot be migrated (since the activity is a scope in the new version and not a scope in the old version).

- If the new version introduces a new (message / signal / timer) boundary event attached to a subprocess, process instances which are waiting in an activity contained by the subprocess can be migrated, but the event will never trigger (event subscription / timer not created when entering the scope).

- If the new version removes a (message / signal / timer) boundary event attached to an activity, process instances which are waiting at this activity cannot be migrated.

- If the new version removes a timer boundary event attached to a subprocess, process instances which are waiting at an activity contained by the subprocess can be migrated. If the timer job is triggered (executed by the job executor) it will fail. The timer job is removed with the scope execution.

- If the new version removes a signal or message boundary event attached to a subprocess, process instances which are waiting at an activity contained by the subprocess can be migrated. The signal/message subscription already exists but cannot be triggered anymore. The subscription is removed with the scope execution.

- If a new version changes field injection on Java classes, you might want to set attributes on a Java class which doesn't exist any more or the other way round: you are missing attributes.
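The first condition listed above (every current activity id must still exist in the new process definition) can be checked before a migration is attempted. The following is a minimal stand-alone sketch of such a check. It assumes that the ids of the currently active activities and the activity ids of the target definition have already been collected; MigrationPrecheckSketch and missingActivityIds are hypothetical names, not engine API. The sketch does not cover the remaining conditions (scopes, sub executions, jobs).

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper, not part of the engine.
class MigrationPrecheckSketch {

  // Returns the ids of current activities that do not exist in the target
  // definition. An empty result means this one condition is satisfied.
  static Set<String> missingActivityIds(Set<String> currentActivityIds,
                                        Set<String> targetActivityIds) {
    Set<String> missing = new TreeSet<String>(currentActivityIds);
    missing.removeAll(targetActivityIds);
    return missing;
  }

  public static void main(String[] args) {
    // The instance waits in "firstTask", which the new version renamed.
    Set<String> current = new HashSet<String>(Arrays.asList("firstTask"));
    Set<String> target = new HashSet<String>(
        Arrays.asList("theStart", "reviewTask", "theEnd"));
    System.out.println(missingActivityIds(current, target));
  }
}
```

A non-empty result means the instance cannot be migrated as is. An empty result is necessary but not sufficient, because the boundary event cases described above can still cause problems.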

Other important aspects to think of when doing version migration are:

- Execution: Migration can lead to situations where some activities from the old or new process definition might have never been executed for some process instances. Keep this in mind, you might have to deal with this in some of your own migration scripts.

- Traceability and Audit Trail: Is the produced audit trail still valid if some entries point to version 1 and some to version 2? Do all activities still exist in the new process definition?

- Reporting: Your reports may be broken or showing strange figures if they get confused by version mishmash.

- KPI Monitoring: Let's assume you introduced new KPIs. For migrated process instances you might get only parts of the figures. Does this do any harm to your monitoring?

If you cannot migrate your process instance you have a couple of alternatives, for example:

- Keep running the old version (as described at the beginning).

- Cancel the old process instance and start a new one. The challenge might be to skip activities that were already executed and to "jump" to the right wait state. This is currently a difficult task; Message Start Events may help here. We are currently discussing providing more support for this through Migration Points. Sometimes you can work around the problem by adding some special handling to your code, by deploying mocks during a migration phase, or by another creative solution.

- Cancel the old process instances and start a new one in a completely customized migration process definition.

So there is no single "standard" way. If in doubt, discuss the right solution for your environment with us.

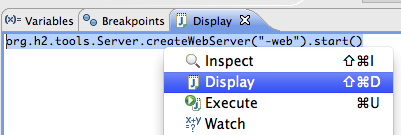

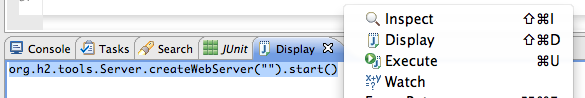

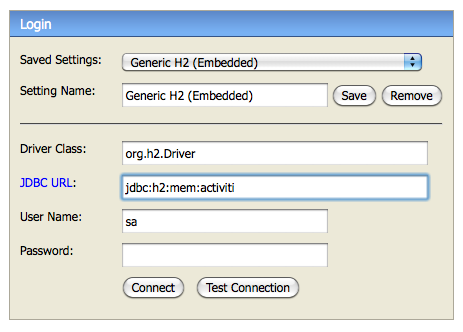

Database Configuration

There are two ways to configure the database that the camunda engine will use. The first option is to define the JDBC properties of the database:

- jdbcUrl: JDBC URL of the database.

- jdbcDriver: implementation of the driver for the specific database type.

- jdbcUsername: username to connect to the database.

- jdbcPassword: password to connect to the database.

Note that internally the engine uses Apache MyBatis for persistence.

The data source that is constructed based on the provided JDBC properties will have the default MyBatis connection pool settings. The following attributes can optionally be set to tweak that connection pool (taken from the MyBatis documentation):

- jdbcMaxActiveConnections: The maximum number of active connections that the connection pool can contain at any time. Default is 10.

- jdbcMaxIdleConnections: The maximum number of idle connections that the connection pool can contain at any time.

- jdbcMaxCheckoutTime: The amount of time in milliseconds a connection can be 'checked out' from the connection pool before it is forcefully returned. Default is 20000 (20 seconds).

- jdbcMaxWaitTime: This is a low level setting that gives the pool a chance to print a log status and re-attempt the acquisition of a connection in the case that it's taking unusually long (to avoid failing silently forever if the pool is mis-configured). Default is 20000 (20 seconds).

Example database configuration:

<property name="jdbcUrl" value="jdbc:h2:mem:camunda;DB_CLOSE_DELAY=1000" />

<property name="jdbcDriver" value="org.h2.Driver" />

<property name="jdbcUsername" value="sa" />

<property name="jdbcPassword" value="" />

Alternatively, a javax.sql.DataSource implementation can be used (e.g. DBCP from Apache Commons):

<bean id="dataSource" class="org.apache.commons.dbcp.BasicDataSource" >

<property name="driverClassName" value="com.mysql.jdbc.Driver" />

<property name="url" value="jdbc:mysql://localhost:3306/camunda" />

<property name="username" value="camunda" />

<property name="password" value="camunda" />

<property name="defaultAutoCommit" value="false" />

</bean>

<bean id="processEngineConfiguration" class="org.camunda.bpm.engine.impl.cfg.StandaloneProcessEngineConfiguration">

<property name="dataSource" ref="dataSource" />

...

Note that camunda does not ship with a library that provides such a data source, so you have to make sure that the required libraries (e.g. from DBCP) are on your classpath.

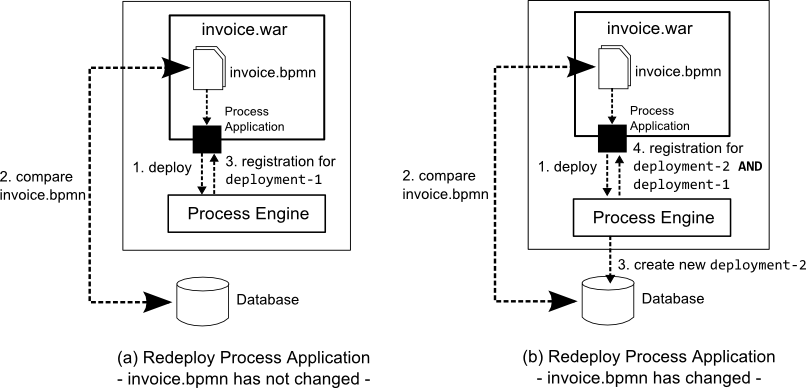

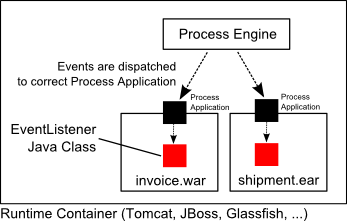

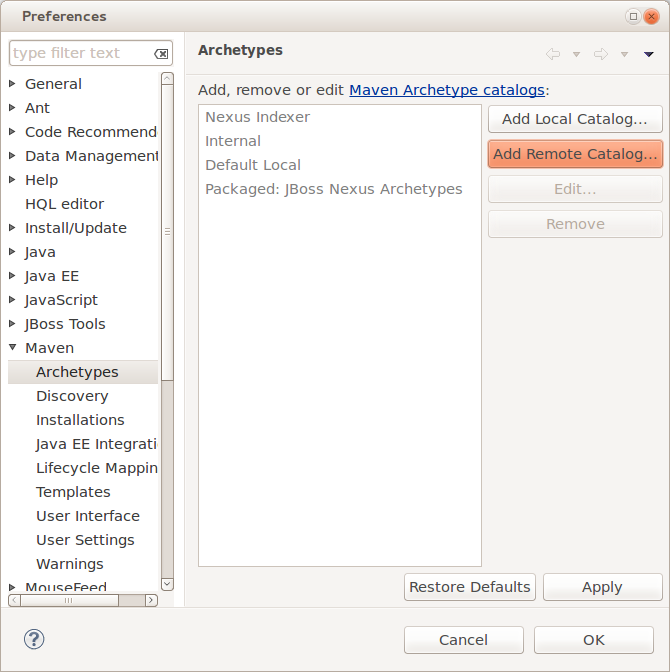

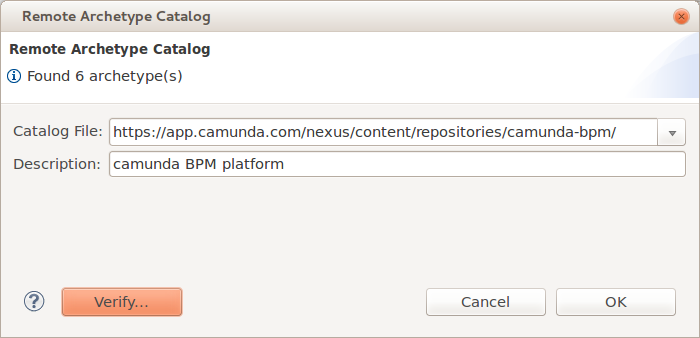

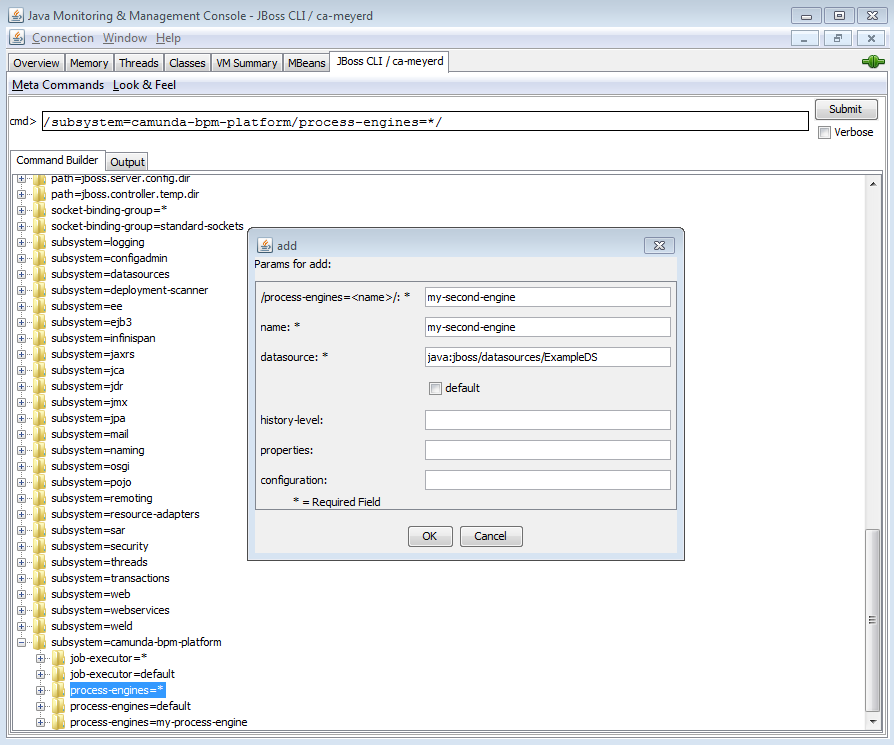

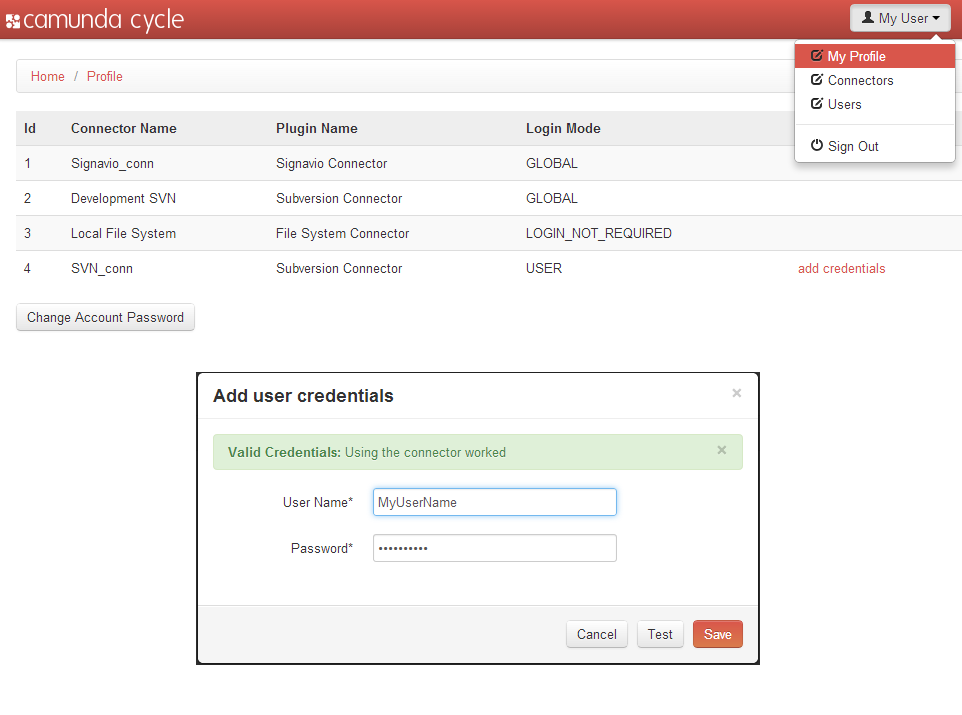

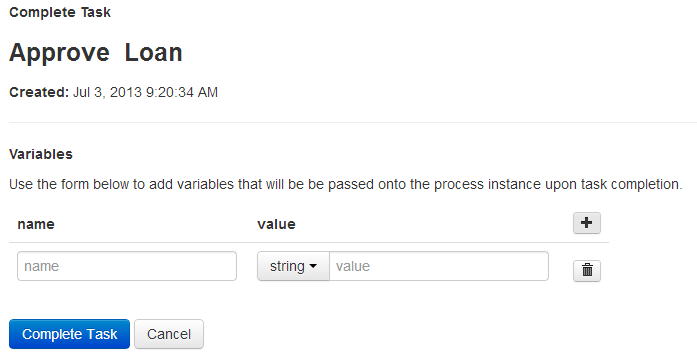

The following properties can be set, regardless of whether you are using the JDBC or data source approach: